Building a more visually equitable future through representation

The invention of the camera provided a new opportunity to represent truth.

But historically, camera technology has been calibrated for lighter skin tones, without consideration for the wide spectrum of human complexion. People with darker skin tones often feel excluded by camera technology, inaccurately pictured in memorable moments. Google’s Pixel 6 was the first smartphone on the market designed specifically to be inclusive. The Pixel 6 features Real Tone™ technology, including camera optimizations to more accurately highlight the nuances of diverse skin tones. This initiative to improve image equity through enhanced camera technology enables photographers to tell more authentic stories than ever before.

To place this groundbreaking technology into cultural context, and to raise awareness of the new Pixel 6, Google collaborated with T Brand Studio. We launched the “Picture Progress” campaign to show people where camera technology started, and the critical role inclusive technology like Real Tone™ plays in ensuring equitable visual representation in the future.

Left image: Dolores Huerta in 1965, photographed by Harvey Richards.

Left image: Ruby Bridges in 1960.

Left image: Phill Wilson in 1995, photographed by Dr. Ron Simmons.

Next we looked to the future. The present-to-future phase put the Pixel 6’s Real Tone™ technology into the hands of the BIPOC photographers Kennedi Carter, Mengwen Cao and Ricardo Nagaoka, giving their art room to showcase the expressive power of accurate representation.

“There is power in being seen, and there is strength in documenting our stories.” (Kennedi Carter)

“Any tool that can help us tell more complete stories can help us imagine more complete solutions.” (Mengwen Cao)

“This is about being seen within places where we exist, but the media rarely shows us.” (Ricardo Nagaoka)

The ad campaign came to life across The New York Times’s most dynamic media canvases in digital, print, audio and video. Its impact spanned beyond The Times’s owned and operated channels, appearing in prominent press coverage, at Google’s launch event, social channels, flagship New York City store and more.

Across digital and print, the Picture Progress ad campaign left an impression on our audience. Viewers were more likely to see Google as having a positive impact on society, and to consider purchasing the Pixel 6. Sales followed suit, and the Pixel 6 sold out soon after its release. The powerful work accomplished the equally meaningful task of igniting a conversation about image equity, turning the spotlight to a significant blind spot in representation and technology.

longer average video engagement than NYTA's internal benchmark

estimated campaign audience

higher Facebook CTR than NYTA’s internal benchmark

How we tested Guided Frame and Real Tone on Pixel

Feb 02, 2024

Google wants to make the world’s most inclusive camera, and that requires a lot of user feedback.

In October, Google unveiled the Pixel 8 and Pixel 8 Pro. Both phones are engineered with AI at the center and feature upgraded cameras to help you take even more stunning photos and videos. They also include two of Google’s most advanced and inclusive accessibility features yet: Guided Frame and Real Tone. First introduced on the Pixel 7, Guided Frame uses a combination of audio cues, high-contrast animations and haptic (tactile) feedback to help people who are blind and low-vision take selfies and group photos. And with Real Tone, which first appeared on the Pixel 6, we continue to improve our camera tuning models and algorithms to more accurately highlight diverse skin tones.

The testing process for both of these features relied on the communities they’re trying to serve. Here’s the inside scoop on how we tested Guided Frame and Real Tone.

How we tested Guided Frame

The concept for Guided Frame emerged after the Camera team invited Pixel’s Accessibility group to attend an annual hackathon in 2021. Googler Lingeng Wang, a technical program manager focused on product inclusion and accessibility, worked with his colleagues Bingyang Xia and Paul Kim to come up with a novel way to make selfies easier for people who are blind or low-vision. “We were focused on the wrong solution: telling blind and low vision users where the front camera placement is,” Lingeng says. “After we ran a preliminary study with three blind Googlers, we realized we need to provide real-time feedback while these users are actively taking selfies.”

To bring Guided Frame beyond a hackathon, various teams across Google began working together, including the accessibility, engineering, haptics teams and more. First, the teams tried a very simple method of testing: closing their eyes and trying to take selfies. Even that inadequate test made it very obvious to sighted team members that “we’re under-serving these users,” said Kevin Fu, product manager for Guided Frame.

It was extremely important to everyone working on this feature that the testing centered on blind and low-vision users throughout the entire process. In addition to internal testers, which are called “dogfooders” at Google, the team involved Google’s Central Accessibility team and sought advice from a group of blind and low-vision Googlers.

After coming up with a first version, they asked blind and low-vision users to try the not-yet-released feature. “We started by giving volunteers access to our initial prototype,” says user experience designer Jabi Lue. “They were asked about things like the audio, the haptics — we wanted to know how everything was weaving together because using a camera is a real-time experience.”

Victor Tsaran, a Material Design accessibility lead, was one of the blind and low-vision Googlers who tested Guided Frame. Victor remembers being impressed by the prototype even then, but also noticed that it got better over time as the team heard and addressed his feedback. “I was also happy that Google Camera was getting a cool accessibility feature of this quality,” he says.

The team was soon experimenting with multiple ideas based on the feedback. “Blind and low-vision testers helped us identify and develop the ideal combination of alternative sensory feedback of audio cues, haptics and vibrations, intuitive sound and visual high-contrast elements,” says Lingeng. From there they scaled up, sharing the final prototype broadly with even more volunteer Googlers.

Kevin, Jabi and Lingeng (with his son) all taking selfies to test the Guided Frame feature. Victor using Guided Frame to take a selfie.

This testing taught the team how important it is for the camera to automatically take a photo when the person’s face is centered. Sighted users don’t usually like this, but blind and low-vision users appreciated not having to also find the shutter key. Voice instructions also turned out to be key, especially for selfie buffs aiming for the best composition: “People quickly knew if they were to the left, right or middle, and could adjust as they wanted to,” Kevin says. Allowing people to take the product home and test in everyday life allowed Guided Frame to help with both selfies and group pictures, which was something Kevin says they discovered users wanted, too.

The team wants to expand what Guided Frame can do, and it’s something they’re continuing to explore. “We’re not done working toward this journey of building the world's most inclusive, accessible camera,” Lingeng says. “It’s truly the input from the blind and low-vision community that made this feature possible.”

How we tested Real Tone

To make Real Tone better we worked with more people in more places, including internationally. The goal was to see how the Real Tone upgrade the team had been working on performed across more of the world, which required expanding who was testing these tools.

“We worked with image-makers who represented the U.K., Australia, India and Laos,” says Florian Koenigsberger, Real Tone lead product manager. “We really tried to have a more globally diverse perspective.” And Google didn’t just send a checklist or a survey — someone from the Real Tone team was there, working with the experts and collecting data. “We needed to make sure we represented ourselves to these communities in a real, human way,” Florian says. “It wasn’t just like, ‘Hey, show up, sign this paper, boom-boom-boom.’ We really made an effort to tell people about the history of this project and why we’re doing it. We wanted everyone to be respected in this process.”

Once on the ground, the Real Tone team asked these aesthetic experts to try to “break” the camera — in other words, to take pictures in places where the camera historically didn’t work for people with dark skin tones. “We wanted to understand the nuance of what was and wasn’t working, and take that back to our engineering team in an actionable way,” Florian explains.

Team members also asked — and were allowed — to watch the experts edit photos, which, for photographers, was a very big request: “That’s very intimate for them,” Florian says. “But then we could see if they were an exposure slider person, or a color slider person. Are you manipulating tones individually? It was really interesting information we took back.”

To get the best feedback, the team shared prototype Pixel devices very early — so early that the phones often crashed. “The Real Tone team member who was there had access to special tools and techniques required to keep the product running,” says Isaac Reynolds, the lead Product Manager for Pixel Camera.

Photos taken with Pixel 7 Pro. The image on the left was taken without Night Sight. The image on right was taken with Night Sight for Real Tone enhancements.

After collecting this information, the team tried to identify what Florian calls “headline issues” — things like hair texture that didn’t look quite right, or when color from the surrounding atmosphere seeped into skin. Then with input from the experts, they settled on what to work on for 2022. And then came the engineering — and more testing. “Every time you make a change in one part of our camera, you have to make sure it doesn’t cause an unexpected negative ripple effect in another part,” Florian says.

In the end, thanks to these partnerships with image experts internationally, Real Tone now works better in many ways, especially in Night Sight, Portrait Mode and other low-light scenes.

“I feel like we’re starting to see the first meaningful pieces of progress of what we originally set out to do here,” Florian says. “We want people to know their community had a major seat at the table to make this phone work for them.”

A version of this post was originally published in December 2022.

Trusted Reviews is supported by its audience. If you purchase through links on our site, we may earn a commission. Learn more.

What is Google Real Tone? How the Pixel camera feature works

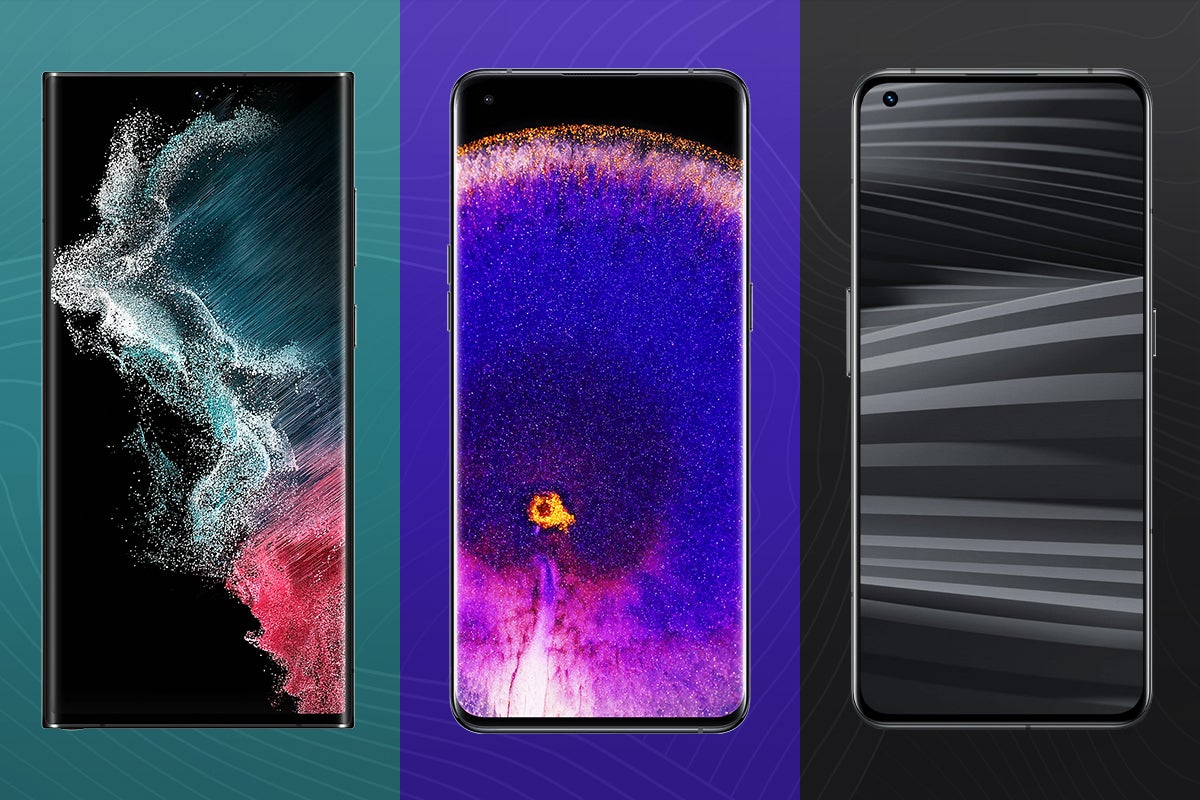

Google recently unveiled the Pixel 8 and Pixel 8 Pro, and among its updated roster of features, the company kept circling back to its Real Tone capabilities – but it’s not a feature exclusive to the most recent models.

Instead, Google Real Tone has been a feature of many recent Pixel phones, down to the budget-focused Pixel 7a and all the way up to the Google Pixel Fold – though performance isn’t consistent among all Pixel phones, with the most recent Pixel 8 series delivering the most accurate skin tones yet.

With that in mind, here’s everything you need to know about Google Real Tone, including what it is, how it works and where you can find it…

What is Google Real Tone?

Real Tone is a Pixel camera feature designed to improve accuracy when reproducing darker skin tones where previous Google phones have fallen short.

Google has worked with more than 60 photographers of different ethnicities to train the Pixel camera to capture darker skin tones more accurately, preventing them from appearing darker or brighter than how they look in real life.

Real Tone is a part of Google’s ongoing effort to address skin tone bias in AI and camera technology, and it has continued to improve its performance since its original iteration on the Pixel 6.

“This racial bias in camera technology has its roots in the way it was developed and tested”, explains Google on its website.

“Camera sensors, processing algorithms, and editing software were all trained largely on data sets that had light skin as the baseline, with a limited scale of skin tones taken into account. This bias has lingered for decades, because these technologies were never adjusted for those with darker skin”.

Because Real Tone is baked into the Pixel’s camera, it is also supported on many third-party apps, like Snapchat.

How does it work?

Rather than being based on one single app or technology, Real Tone follows a framework that addresses six core areas in Google’s imaging technology.

First of all, the Pixel camera is trained to detect a diverse range of faces to ensure the camera can get an in-focus image in a variety of lighting conditions.

Next comes white balance. The auto-white balance is designed to better reflect a variety of skin tones.

The automatic exposure is responsible for how bright photos snapped on the Pixel look, so Google has improved this as well.

Furthermore, the company developed a new algorithm to reduce the impact of stray light on an image, ensuring darker skin tones don’t appear washed out when framed by a sunlit window, for example.

Avoiding blur is also central to getting a good photo, so Google has used the AI in its custom-made Tensor chip to keep images sharp in low-light conditions.

Finally, the auto-enhancement feature in Google Photos works with photos taken with smartphones outside of the Pixel, meaning other Android users can optimise the colour and lighting in their images after taking them.

All of the above is applied to the Pixel’s camera, which is then tested by professionals to expand Google’s image datasets.

“We continue to work with image experts – like photographers, cinematographers, and colorists – who are celebrated for their beautiful and accurate imagery of communities of color”, said Google’s Image Equity Lead, Florian Koenigsberger.

“We ask them to test our cameras in a wide range of tough lighting conditions. In the process, they take thousands of portraits that make our image datasets 25 times more diverse – to look more like the world around us”.

More recently, Google has open-sourced the Monk Skin Tone (MST) scale, a scale developed by Harvard professor Dr Ellis Monk that is more inclusive and provides a broader spectrum of skin tones than the current tech industry standard.

Which Pixels support Real Tone?

Real Tone launched on the Pixel 6 in 2021 and can be found on the following phones:

- Pixel 8 Pro

- Pixel 7 Pro

- Pixel 6 Pro

Hannah joined Trusted Reviews as a staff writer in 2019 after graduating with a degree in English from Royal Holloway, University of London. She’s also worked and studied in the US, holding positions …

Chrome Unboxed - The Latest Chrome OS News

Google’s Real Tone technology wins top prize in Mobile category at Cannes Lions

June 23, 2022 By Johanna Romero

Google has garnered much praise from both its users and the media for its new “Real Tone” technology that launched alongside the Pixel 6 line and sought to improve the representation of diverse skin tones across all of Google’s products. To achieve this, Google partnered with Dr. Ellis Monk to develop the “Monk Skin Tone Scale,” which was designed to be more inclusive of the variety of skin tones in our society.

As part of its marketing efforts to bring the good tidings to the masses, Google launched a campaign that was featured in the Super Bowl this year with recording artist Lizzo singing to the tune of “If you love me, you love all of me.” The 60-second ad spot brought awareness to the fact that camera technology in the past had failed to capture true skin tones when it came to those with a darker complexion and included testimonials from real-life Pixel 6 owners who were finally able to see the full richness of their darker skin tone in a photo – thanks to Google’s Real Tone.

The technology, and its subsequent ad campaign, were submitted for consideration to the Cannes Lions International Festival of Creativity. Not to be confused with the widely known Cannes Film Festival, this festival awards advertising films that bring forth new ideas that shape the next wave of creativity. In the case of the Mobile Lions, the category in which “Real Tone” was entered celebrates device-driven creativity.

Google’s idea was simple: design an inclusive camera with a strong focus on people with darker skin tones and test this camera and the Google Photos editor in a wide range of tough lighting conditions. And that it did. After consideration from the judges, Google was indeed awarded the top “Grand Prix” prize in the Mobile category, managing to impress with its innovative use of technology and advanced learning technologies. Hugo Veiga, Global Chief Creative Officer at AKQA, who led the jury for this category, said, “When we got to the Grand Prix, practically everyone raised their arms for this idea. This was an idea that simply portrays reality, and what a huge step that is.”

Sources: AdAge | Cannes Lions

The saying “the camera cannot lie” is almost as old as photography itself. But it’s never actually been true.

Skin tone bias in artificial intelligence and cameras is well documented. And the effects of this bias are real: Too often, folks with darker complexions are rendered unnaturally – appearing darker, brighter, or more washed out than they do in real life. In other words, they’re not being photographed authentically.

That’s why Google has been working on Real Tone. The goal is to bring accuracy to cameras and the images they produce. Real Tone is not a single technology, but rather a broad approach and commitment – in partnership with image experts who have spent their careers beautifully and accurately representing communities of colour – that has resulted in tuning computational photography algorithms to better reflect the nuances of diverse skin tones.

It’s an ongoing process. Lately, we’ve been making improvements to better identify faces in low-light conditions. And with Pixel 8, we’ll use a new colour scale that better reflects the full range of skin tones.

Photographed on Pixel 7 Pro

Photographing skin tones accurately isn’t a trivial issue. Some of the most important and most frequently appearing subjects in images are people. Representing them properly is important.

“Photos are symbols of what and who matter to us collectively,” says Florian Koenigsberger, Google Image Equity Lead, “so it’s critical that they work equitably for everyone – especially for communities of colour like mine, who haven’t always been seen fairly by these tools.”

Real Tone isn’t just about making photographs look better. It has an impact on the way stories are told and how people see the world – and one another. Real Tone represents an exciting first step for Google in acknowledging that cameras have historically centered on light skin. It’s a bias that’s crept into many of our modern digital imaging products and algorithms, especially because there hasn’t been enough diversity in the groups of people they’re tested with.

This racial bias in camera technology has its roots in the way it was developed and tested. Camera sensors, processing algorithms, and editing software were all trained largely on data sets that had light skin as the baseline, with a limited scale of skin tones taken into account. This bias has lingered for decades, because these technologies were never adjusted for those with darker skin.

In 2020, Google began looking for ways to change this, with the goal of making photography more fair. We made a number of improvements, notably to the way skin tones are represented. We expanded that program again in 2021 to introduce even more enhancements across exposure, colour, tone-mapping, face detection, face retouching, and more, in many apps and camera modes.

It was a group effort, because building better tools for a community works best when they’re built with the community.

“We continue to work with image experts – like photographers, cinematographers, and colourists – who are celebrated for their beautiful and accurate imagery of communities of colour,” says Koenigsberger. “We ask them to test our cameras in a wide range of tough lighting conditions. In the process, they take thousands of portraits that make our image datasets 25 times more diverse – to look more like the world around us.”

Photographed on Pixel 7

Photographed on Pixel 6a

The result of that collaboration isn’t an app or a single technology, but a framework that we’re committing to over many years, across all of our imaging products. Real Tone is a collection of improvements that are part of Google’s Image Equity Initiative, which is focused on building camera and imaging products that work equitably for people of colour, so that everyone feels seen, no matter their skin tone. Here are some of the things we’re doing to help make pictures more authentic:

Recognise a broader set of faces. Detecting a person’s face is a key part of getting a great photo that’s in focus. We’ve diversified the images we use to train our models to find faces more successfully, regardless of skin tone and in a variety of lighting conditions.

Correctly represent skin colour in pictures. Automatic white balance is a standard camera feature that helps set the way colours appear in an image. We worked with various partners to improve the way white balance works to better reflect a variety of skin tones.

Make skin brightness appear more natural. Similarly, automatic exposure is used to determine how bright a photograph appears. Our goal with Real Tone was to ensure that people of colour do not appear unnaturally darker or brighter, but rather exactly as they really are.

Reduce washed-out images. Stray light in a photo setting can wash out any image – such as when you are taking a picture with a sunlit window directly behind the subject. This effect is even greater for people with darker skin tones, often leaving their faces in complete shadow. A new algorithm Google developed aims to reduce the impact of stray light on finished images.

Sharpen images even in low light. We discovered that photos of people with darker skin tones tend to be more blurry than normal in low light conditions. We leveraged the AI features in the Google Tensor chip to sharpen photos, even when the photographer’s hand isn’t steady and when there isn’t a lot of light available.

More ways to tune up any photo. The auto-enhancement feature in Google Photos works with uploaded photos that were taken any time and with any camera, not just Pixel. It optimises colour and lighting in any picture, across all skin tones.

Collectively, all of these improvements work together to make every picture more authentic and more representative of the subject of the photo – regardless of their skin tone.
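To make the white balance item above more concrete, here is a minimal Python sketch of the textbook "gray-world" heuristic, which scales each colour channel so that the scene averages out to neutral gray. This is not Google's Real Tone method, which the article does not describe in detail; the function name `gray_world_white_balance` and the sample pixel values are illustrative assumptions.

```python
def gray_world_white_balance(pixels):
    """Apply the gray-world heuristic to a list of (R, G, B) tuples (0-255).

    Assumption for illustration only: a generic textbook technique,
    not the algorithm used in any Pixel camera.
    """
    n = len(pixels)
    if n == 0:
        return []
    # Average value of each channel across the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    # Target gray level: the mean of the three channel averages.
    gray = sum(means) / 3
    # Per-channel gain that moves each channel average onto the gray level.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    # Scale every pixel, clamping to the valid 8-bit range.
    return [
        tuple(min(255, round(p[c] * gains[c])) for c in range(3))
        for p in pixels
    ]


# Hypothetical sample: two pixels with a strong red cast.
sample = [(200, 100, 100), (100, 50, 50)]
balanced = gray_world_white_balance(sample)
print(balanced)
```

A heuristic like this depends entirely on what the scene as a whole is assumed to look like, which hints at why the choice of tuning and test images matters for how any individual subject is rendered.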

This is an area we’ve been focused on improving for some time, and we still have a lot of work to do. Making photography more inclusive isn’t a problem that’s solved in one go – rather, it’s a mission we’re committed to. We’re now looking at images from end to end, from the moment the photo is taken to how it shows up in other products. This will have ongoing implications for multiple products as we continue to improve.

Recently, Google partnered with Dr. Ellis Monk, a Harvard professor and sociologist who for more than a decade has studied the way skin tone impacts people’s lives. The culmination of Dr. Monk’s research is the Monk Skin Tone (MST) Scale, a 10-shade scale that has been incorporated into various Google products. The scale has also been made public, so anyone across the industry can use it for research and product development. We see this as a chance to share, learn, and evolve our work with the help of others, leading to more accurate algorithms, a better way to determine representative datasets, and more granular frameworks for our experts to provide feedback.

“In our research, we found that a lot of the time people feel they’re lumped into racial categories, but there’s all this heterogeneity with ethnic and racial categories,” Dr. Monk has said. “And many methods of categorisation, including past skin tone scales, don’t pay attention to this diversity. That’s where a lack of representation can happen… we need to fine-tune the way we measure things, so people feel represented.”

Inclusive photography is a work in progress. We’ll continue to partner with experts, listen to feedback, and invest in tools and experiences that work for everyone. Because everyone deserves to be seen as they are.
