
- Audio Waveforms Explained | Insights For Audio Editors 2024

by Mixing Monster | Last updated Dec 21, 2024



Audio waveforms are the backbone of sound editing and engineering. They visually represent sound by displaying changes in amplitude and frequency, which is crucial for understanding how sound behaves in different environments. This intricate representation allows audio editors to precisely manipulate sound, tailoring it to specific needs and contexts.

Audio waveforms are fundamental in audio editing and engineering. Their visual representation of sound – depicting amplitude and frequency variations – is a critical tool for audio professionals. Understanding and manipulating audio waveforms is essential for creating high-quality audio content.

The exploration of audio waveforms reveals various possibilities for sound manipulation. As we delve deeper, we uncover the concept and science behind these fascinating sound signatures.

Table Of Contents

- 1. The Fundamentals Of Audio Waveforms

- 2. Physics Behind Audio Waveforms

- 3. Types Of Audio Waveforms

- 4. Acoustics And Audio Waveforms

- 5. Waveforms In Analog And Digital Audio

- 6. Editing Techniques For Audio Waveforms

- 7. Audio Waveforms In Mixing And Mastering

- 8. Audio Waveforms: Key Takeaways And Future Directions

- Visualizing Sound: An Introduction To Waveform Graphics

Waveform graphics provide a visual representation of precisely these sound characteristics. They illustrate the amplitude and frequency of sound waves over time, allowing audio engineers to analyze and manipulate sound in a more tangible form.

Delving deeper into the science of sound, this section examines the physical principles governing audio waveforms. Understanding these concepts is vital for any audio professional seeking to master the craft of sound editing and engineering.

- Sound Wave Propagation

Sound waves propagate through various mediums (like air, water, and solids) as vibrations. These vibrations create longitudinal waves characterized by compressions and rarefactions, allowing the sound to travel over distances.

The speed of sound varies depending on the medium, affecting how the waveform is perceived.

- The Relationship Between Frequency And Pitch

Frequency, a fundamental aspect of sound waves, directly affects the pitch of a sound. Higher frequencies result in higher pitches, while lower frequencies lead to lower pitches. This relationship is pivotal in music and audio editing, as it determines the tonal quality of the sound.
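The frequency-to-pitch relationship can be made concrete with a small sketch. It assumes 12-tone equal temperament, MIDI note numbers, and an A4 reference of 440 Hz; none of these conventions come from the article itself, and the function name is illustrative.

```python
def note_to_frequency(midi_note: int, a4_hz: float = 440.0) -> float:
    """Frequency in Hz of a MIDI note, assuming 12-tone equal temperament."""
    # Each semitone multiplies the frequency by 2**(1/12);
    # MIDI note 69 is the A above middle C (A4).
    return a4_hz * 2.0 ** ((midi_note - 69) / 12)


a4 = note_to_frequency(69)        # reference pitch
a5 = note_to_frequency(81)        # twelve semitones (one octave) higher
middle_c = note_to_frequency(60)  # lower pitch, lower frequency

print(a4, a5, middle_c)
```

Raising the pitch by an octave doubles the frequency, which is why `a5` comes out at twice `a4`.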

- Harmonics And Overtones Explained

Harmonics and overtones are essential elements in the timbre or color of sound. A fundamental frequency is accompanied by harmonics, which are integer multiples of this original frequency.

Overtones, which can be harmonic or inharmonic, add richness and complexity to the sound, playing a crucial role in distinguishing different sounds and instruments.
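As a rough illustration of how harmonics shape a waveform, the sketch below sums sine waves at integer multiples of a fundamental. The 1/k amplitude weighting, the 8 kHz sample rate, and the function name are assumptions chosen for the example, not values from the article.

```python
import math


def harmonic_tone(fundamental_hz, num_harmonics, sample_rate=8000, num_samples=80):
    """Sum the first `num_harmonics` harmonics of a fundamental.

    Harmonic k sits at k * fundamental_hz and is weighted by 1/k
    (an assumed weighting that gives a sawtooth-like shape).
    """
    samples = []
    for n in range(num_samples):
        t = n / sample_rate
        value = sum(
            math.sin(2 * math.pi * k * fundamental_hz * t) / k
            for k in range(1, num_harmonics + 1)
        )
        samples.append(value)
    return samples


pure = harmonic_tone(200.0, num_harmonics=1)  # fundamental only: a sine wave
rich = harmonic_tone(200.0, num_harmonics=8)  # same pitch, different timbre
```

Both tones repeat at the same rate, so they share a pitch; only the added harmonics change the shape of each cycle.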

Comparison Of Waveform Types

Understanding these basic waveform types and their sonic qualities is crucial for anyone engaged in sound design and audio editing. Each waveform brings a unique flavor to the sound palette, allowing various creative possibilities in music production and audio engineering.

In this section, we explore the interaction between audio waveforms and their environments, focusing on acoustics, which significantly impact how sound is perceived and manipulated in audio engineering.

- Room Acoustics And Waveform Behavior

Room acoustics play a vital role in how sound waves behave. A room’s size, shape, and materials can significantly affect sound waves’ absorption, reflection, and diffusion, altering waveform representations.

Understanding these acoustic properties is essential for optimizing recording and playback environments.

- The Impact Of Materials On Sound Waves

Different materials have varying effects on sound waves.

Hard surfaces like concrete and glass reflect sound, creating echoes and reverberations, while softer materials like foam and carpet absorb sound, reducing echoes.

These material characteristics must be considered when setting up a recording studio or optimizing a listening space.

- Understanding Echoes And Reverberations

Echoes and reverberations result from the interaction of sound waves with their environment.

Echoes are reflections of sound arriving at the listener’s ear after a delay, while reverberations are the persistence of sound in a space after the original sound has stopped.

Both can be creatively used or managed in audio editing and room acoustics design.
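A single echo can be sketched as a delayed, attenuated copy of the signal mixed back into it. This is a simplified model with one reflection and no feedback, and the function and parameter names are illustrative assumptions.

```python
def add_echo(samples, delay_samples, decay=0.5):
    """Mix one delayed, quieter copy of the signal into the original."""
    # Extend the output so the echo tail is not cut off.
    out = list(samples) + [0.0] * delay_samples
    for i, s in enumerate(samples):
        out[i + delay_samples] += s * decay
    return out


dry = [1.0, 0.0, 0.0, 0.0]  # a single click
wet = add_echo(dry, delay_samples=2, decay=0.5)
print(wet)  # [1.0, 0.0, 0.5, 0.0, 0.0, 0.0]
```

Reverberation could be approximated by feeding many such reflections back into the signal, which this sketch deliberately leaves out.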

- Transients In Audio Waveforms Explained

Transients are short, high-energy bursts of sound that occur at the beginning of a waveform, like the strike of a drum or the pluck of a guitar string.

In digital audio, capturing these transients accurately is crucial for a realistic sound representation.
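One simple way to locate transients is to look for a sudden jump in short-term energy. The sketch below is an assumed heuristic rather than how any particular DAW works; the frame size, energy ratio, and noise floor are arbitrary illustrative values.

```python
def find_transients(samples, frame_size=4, ratio=4.0, floor=1e-6):
    """Return sample indices of frames whose energy jumps sharply."""
    # Mean energy of each non-overlapping frame.
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(sum(x * x for x in frame) / frame_size)

    onsets = []
    for i in range(1, len(energies)):
        # Flag a frame that is `ratio` times more energetic than the previous one.
        if energies[i] > ratio * max(energies[i - 1], floor):
            onsets.append(i * frame_size)
    return onsets


# Quiet background, then a drum-like burst starting at sample 8.
signal = [0.01] * 8 + [0.9, -0.7, 0.5, -0.3] + [0.1] * 4
onsets = find_transients(signal)
print(onsets)  # [8]
```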


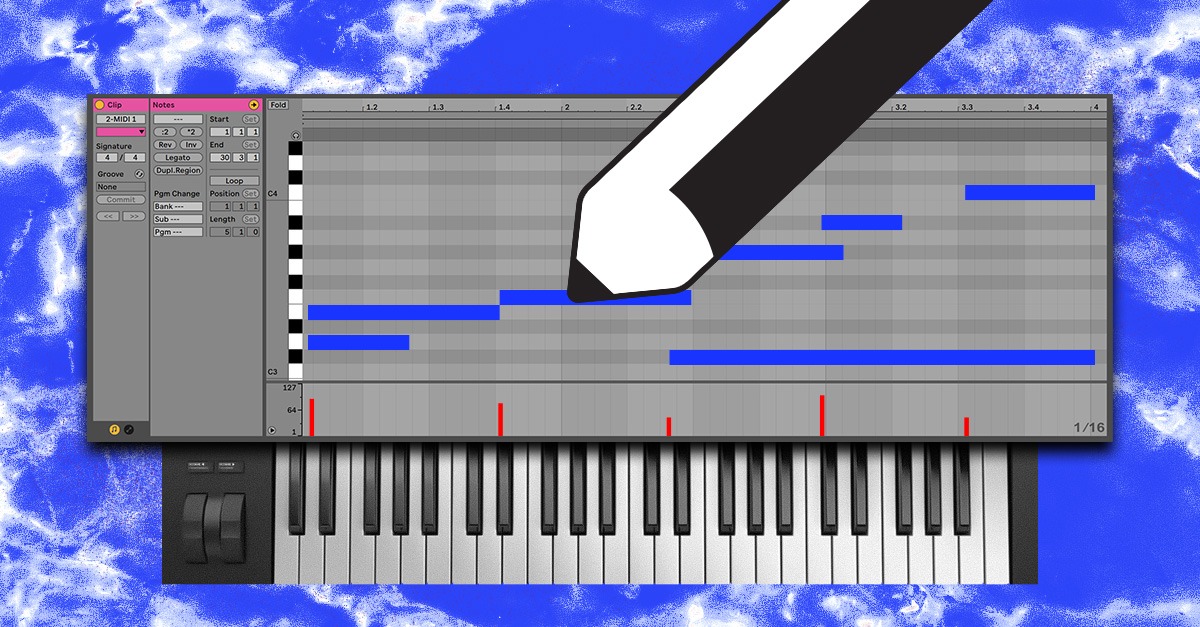

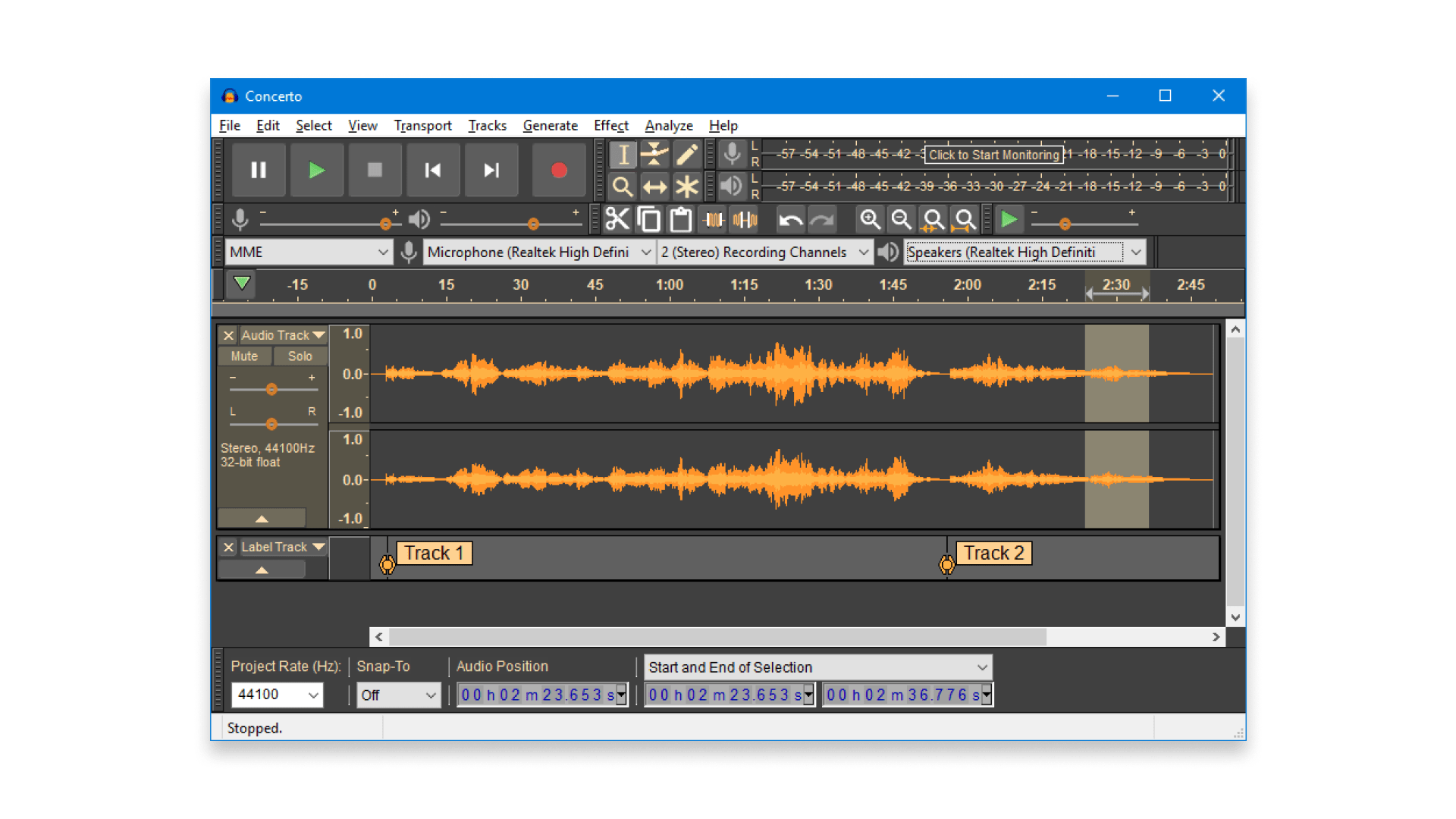

- The Role Of Digital Audio Workstations (DAWs)

DAWs are integral to modern audio editing, providing tools to manipulate digital waveforms.

They allow for precise editing, effects application, and mixing, making them indispensable in audio production.

This section is dedicated to the practical aspects of manipulating audio waveforms in editing. It covers a range of basic to advanced techniques suitable for novice and experienced audio editors.

- Basic Audio Waveform Manipulation Techniques

Fundamental techniques include trimming, fading, and adjusting gain. Trimming alters the length of an audio clip, fading helps in the smooth transition of sound, and gain adjustment controls the waveform’s amplitude. These are the starting points for any audio editing project.
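The three basic operations can be sketched on a plain list of samples. The helper names and the linear fade shape are assumptions for illustration; real editors offer several fade curves and operate on multichannel audio.

```python
def trim(samples, start, end):
    """Keep only the samples in [start, end)."""
    return samples[start:end]


def apply_gain(samples, gain_db):
    """Scale amplitude by a gain given in decibels."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]


def fade_in(samples, fade_len):
    """Ramp linearly from silence to full level over `fade_len` samples."""
    fade_len = min(fade_len, len(samples))
    return [
        s * (i / fade_len) if i < fade_len else s
        for i, s in enumerate(samples)
    ]


clip = [0.5] * 10
# Trim to six samples, lower the level by 6 dB, then fade in over three samples.
edited = fade_in(apply_gain(trim(clip, 2, 8), -6.0), fade_len=3)
```

A gain of -6 dB roughly halves the amplitude, so the fully faded-in samples end up close to 0.25.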

- Advanced Editing: Compression And Equalization

Compression reduces the dynamic range of an audio signal by attenuating the loudest parts; with makeup gain applied afterwards, quieter passages become relatively louder, which can help achieve a balanced mix.

Equalization (EQ) allows the editor to boost or cut specific frequencies, shaping the tonal balance of the audio.
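The effect of compression on dynamic range can be sketched with a static gain curve applied sample by sample. This simplified model has no attack or release smoothing, and the threshold, ratio, and function name are assumed values for illustration.

```python
import math


def compress(samples, threshold_db=-12.0, ratio=4.0, makeup_db=0.0):
    """Apply a static compression curve to each sample."""
    out = []
    for s in samples:
        if s == 0.0:
            out.append(0.0)
            continue
        level_db = 20 * math.log10(abs(s))
        if level_db > threshold_db:
            # Above the threshold, every `ratio` dB of input yields 1 dB of output.
            level_db = threshold_db + (level_db - threshold_db) / ratio
        level_db += makeup_db
        out.append(math.copysign(10 ** (level_db / 20), s))
    return out


loud, quiet = compress([1.0, 0.1])
```

With these settings a full-scale sample (0 dBFS) is reduced to about -9 dBFS, while the sample at -20 dBFS stays where it was, so the gap between loud and quiet narrows.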

- Restoring And Enhancing Audio Quality

Audio restoration involves removing unwanted noises like hisses, clicks, or hums and can be particularly challenging.

Enhancing audio quality might involve:

- Applying stereo widening.

- Adding reverb.

- Using harmonic exciters to add richness and depth to the sound.

- Common Mistakes In Audio Waveform Editing

It’s essential to be aware of common pitfalls in audio editing, such as over-compression, excessive EQ, or ignoring phase issues. Understanding these mistakes helps in avoiding them and achieving a more professional result.

Quick Tips For Effective Audio Waveform Editing

- Start With Clean Audio: Ensure your recordings are as clean as possible before editing.

- Monitor Levels Carefully: Keep an eye on your levels to avoid clipping.

- Use EQ Sparingly: Equalize gently; avoid overdoing it.

- Compress With Caution: Use compression to balance levels, but don’t overcompress.

- Listen On Different Systems: Check your edits on various audio systems for consistency.

- Save Originals: Always keep a copy of the original files before making edits.

- Take Breaks: Regular breaks help maintain a fresh perspective. Do it!

- Trust Your Ears: Your ears are your best tool; if it sounds right, it usually is.
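The advice on monitoring levels can be backed by a quick numeric check. This sketch assumes floating-point samples where full scale is ±1.0; the function names are illustrative.

```python
import math


def peak_dbfs(samples):
    """Peak level in dB relative to full scale (1.0)."""
    peak = max((abs(s) for s in samples), default=0.0)
    return float("-inf") if peak == 0.0 else 20 * math.log10(peak)


def count_clipped(samples, ceiling=1.0):
    """Number of samples at or beyond the clipping ceiling."""
    return sum(1 for s in samples if abs(s) >= ceiling)


take = [0.2, 1.0, -1.0, 0.9]
print(peak_dbfs([0.5, -0.25]))  # roughly -6.02
print(count_clipped(take))      # 2
```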

This section focuses on managing audio waveforms in the critical mixing and mastering stages, emphasizing how waveform analysis can enhance these processes.

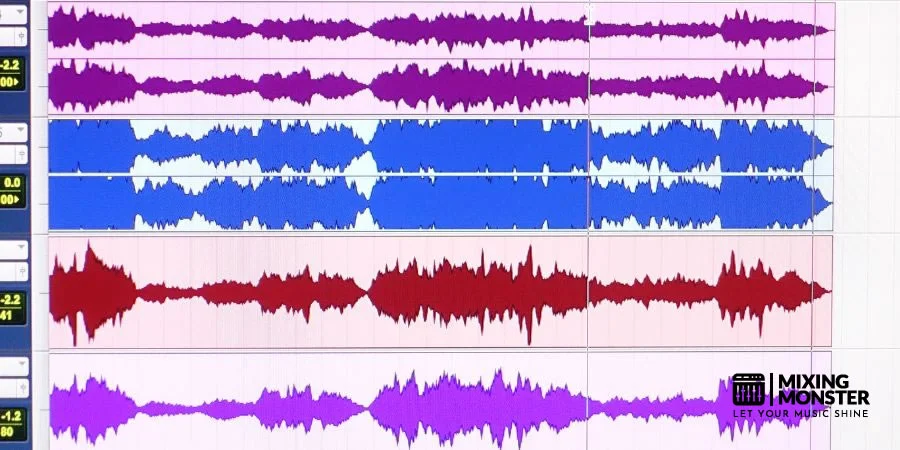

- Analyzing Tracks Using Waveforms

In mixing, visual analysis of waveforms can be as critical as listening. It helps identify issues like clipping, phase problems, and timing discrepancies. Waveform analysis is essential to ensuring tracks are well-aligned and dynamically balanced.

- Balancing Tracks Using Waveforms

Balancing tracks involves adjusting levels, panning, and dynamics to create a harmonious mix. Waveforms provide a visual guide to these elements, helping to achieve a balanced and cohesive sound.

For example, check that the waveforms of different instruments complement rather than compete with one another.

- Utilizing Waveforms For Stereo Imaging

Stereo imaging involves the placement of sounds within the stereo field to create a sense of width and depth. Waveforms can indicate stereo spread and help make informed decisions about panning and stereo enhancement techniques.
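A common numeric companion to visual stereo and phase checks is the correlation between the left and right channels. The sketch below computes a normalized correlation over a whole clip; the function name is illustrative, and hardware or plugin correlation meters typically work over short sliding windows instead.

```python
import math


def stereo_correlation(left, right):
    """Normalized correlation between channels, from -1.0 to +1.0."""
    num = sum(l * r for l, r in zip(left, right))
    den = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return 0.0 if den == 0.0 else num / den


left = [0.5, -0.5, 0.25]
mono_like = stereo_correlation(left, left)                  # identical channels
inverted = stereo_correlation(left, [-s for s in left])     # polarity flipped
```

Values near +1 suggest a narrow, mono-compatible image, while values near -1 point to a polarity or phase problem that may cancel when the mix is summed to mono.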

As we conclude our deep dive into audio waveforms, it’s clear that these fundamental sound elements are more than just technicalities—they are the art and science of audio engineering personified.

- The Essence Of Audio Waveforms

Reflecting on our journey through the intricacies of audio waveforms, we recognize their pivotal role in shaping the sounds that define our experiences.

From the pure tones of sine waves to the rich harmonic content of sawtooth waves, understanding waveforms is akin to understanding the language of sound itself.

- Innovations On The Horizon

The field of audio engineering is continually evolving, with new technologies and methodologies emerging regularly.

Innovations like AI-assisted editing, advanced digital synthesis, and immersive audio formats are set to revolutionize how we interact with waveforms, offering even more precision and creative freedom.

- A Parting Note: The Art Of Listening

Above all, the most crucial skill in our toolkit remains our ability to listen, not just to the sound itself, but to the story it tells through its waveform.

As we ride the ever-changing audio wave, remember that at the heart of every great sound is an even better listener.


- Thank You For Reading!

Thank you for reading our post! We hope you found the insights both informative and valuable. We welcome your thoughts and concerns on the topic, so please feel free to contact us anytime. We’d love to hear from you!

Mixing Monster is a professional music studio with over 25 years of experience in music production and audio engineering. We help people worldwide achieve the perfect tone for their music.


Computer Science > Multimedia

Title: From Vision to Audio and Beyond: A Unified Model for Audio-Visual Representation and Generation

Abstract: Video encompasses both visual and auditory data, creating a perceptually rich experience where these two modalities complement each other. As such, videos are a valuable type of media for the investigation of the interplay between audio and visual elements. Previous studies of audio-visual modalities primarily focused on either audio-visual representation learning or generative modeling of a modality conditioned on the other, creating a disconnect between these two branches. A unified framework that learns representation and generates modalities has not been developed yet. In this work, we introduce a novel framework called Vision to Audio and Beyond (VAB) to bridge the gap between audio-visual representation learning and vision-to-audio generation. The key approach of VAB is that rather than working with raw video frames and audio data, VAB performs representation learning and generative modeling within latent spaces. In particular, VAB uses a pre-trained audio tokenizer and an image encoder to obtain audio tokens and visual features, respectively. It then performs the pre-training task of visual-conditioned masked audio token prediction. This training strategy enables the model to engage in contextual learning and simultaneous video-to-audio generation. After the pre-training phase, VAB employs the iterative-decoding approach to rapidly generate audio tokens conditioned on visual features. Since VAB is a unified model, its backbone can be fine-tuned for various audio-visual downstream tasks. Our experiments showcase the efficiency of VAB in producing high-quality audio from video, and its capability to acquire semantic audio-visual features, leading to competitive results in audio-visual retrieval and classification.


Understanding Audio Waveforms: A Comprehensive Guide

Audio waveforms are the visual representations of sound waves. Simply put, they show us how sounds change over time. Understanding audio waveforms is crucial in many areas, such as music production, sound engineering, and data analysis. In this comprehensive guide, we'll explore the intricacies of audio waveforms, their influence on technology and society, the key concepts and definitions you need to know, and how to visualize data using waveform images. Additionally, we will demystify the jargon and provide you with a comprehensive guide to technical terminology. Whether you are a beginner or an expert in the field, this guide will deepen your understanding of audio waveforms and their role in the modern world.

Challenge your understanding

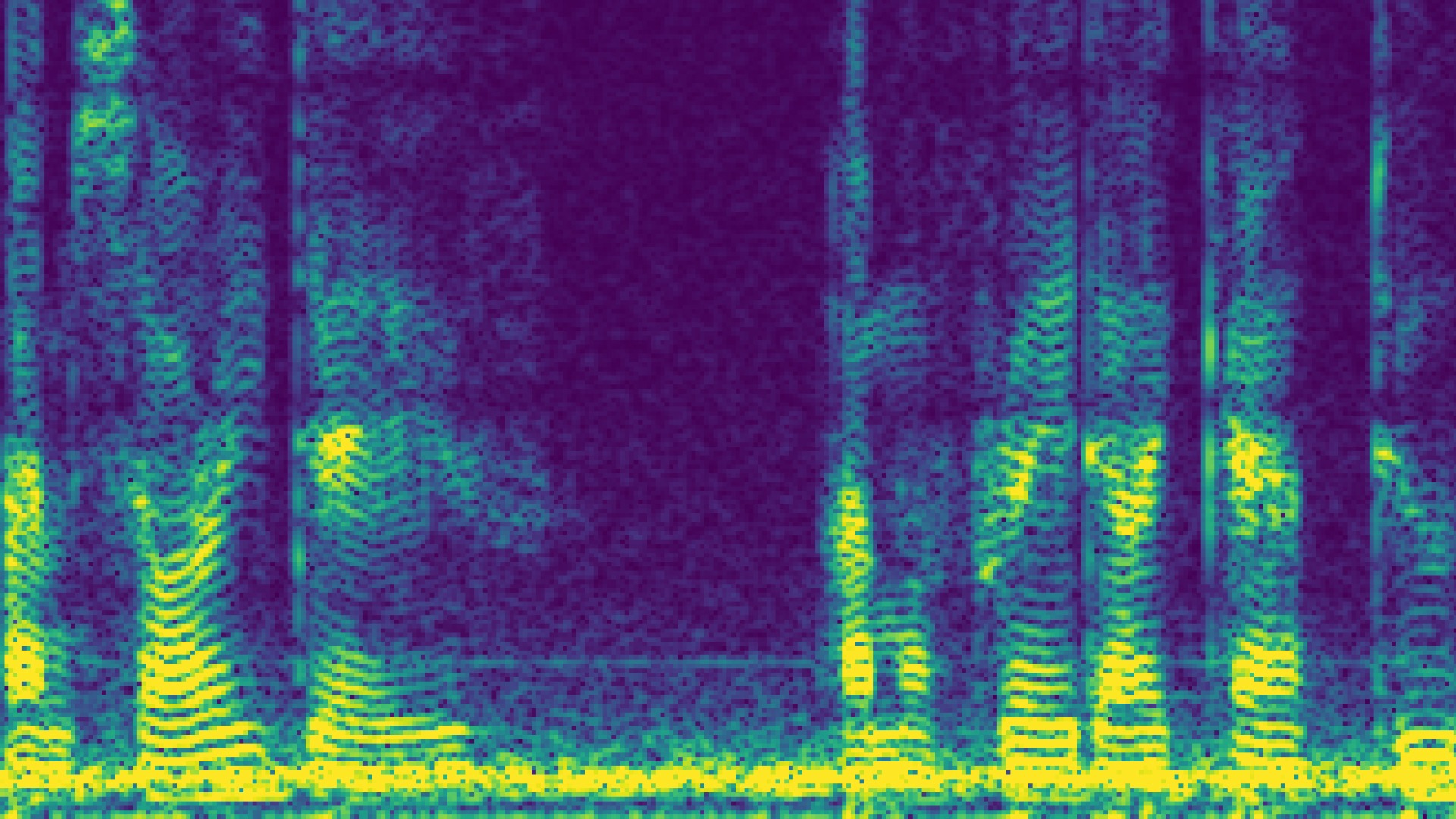

Before we delve into the details, let's first test your understanding of audio waveforms. Take a moment and analyze the waveform below:

Do you notice any patterns or irregularities in the waveform? What conclusions can you draw about the sound it represents? Take time to analyze and take notes; we will discuss this in more detail later.

As you examine the waveform, you may notice various patterns such as peaks and troughs that indicate changes in amplitude. These amplitude changes correspond to the loudness or intensity of the sound at different times. You may also notice distinct cycles or repetitions that indicate a periodic sound wave. The waveform can also provide information about the frequency of the sound, as the distance between each cycle indicates the time it takes for a complete oscillation.
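The link between cycle length and frequency can be sketched by timing the upward zero crossings of a periodic signal. This approach assumes a clean, roughly single-pitched waveform; the function name, test tone, and sample rate are illustrative choices.

```python
import math


def estimate_frequency(samples, sample_rate):
    """Estimate frequency from the average spacing of upward zero crossings."""
    crossings = [
        i for i in range(1, len(samples))
        if samples[i - 1] < 0.0 <= samples[i]
    ]
    if len(crossings) < 2:
        return None  # not enough cycles to measure a period
    avg_period = (crossings[-1] - crossings[0]) / (len(crossings) - 1)
    # Frequency is the reciprocal of the period (converted from samples to seconds).
    return sample_rate / avg_period


sample_rate = 8000
tone = [math.sin(2 * math.pi * 100 * n / sample_rate + 0.5) for n in range(800)]
estimate = estimate_frequency(tone, sample_rate)
```

Here each cycle of the 100 Hz test tone spans 80 samples, so dividing the sample rate by that period recovers the frequency.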

Additionally, irregularities in the waveform, such as sudden peaks or dips, can indicate abrupt changes in sound, such as a sudden increase or decrease in volume. These irregularities can also indicate the presence of overtones that contribute to the timbre or tonal quality of the sound.

Put your knowledge to the test

Now that you've learned the basics of audio waveforms, put your knowledge to the test with a few questions:

- What is the main purpose of audio waveforms?

- What can audio waveforms tell you about a sound?

- What are some practical applications for understanding audio waveforms?

Take a moment to consider your answers before continuing. We will provide detailed explanations shortly.

1. The main purpose of audio waveforms is to visually represent the changes in amplitude and frequency of a sound wave over time. By visually representing audio waveforms, we can better analyze and understand the properties of a sound.

2. Audio waveforms can reveal different aspects of a sound, such as its volume, pitch, duration, and timbre. By studying the waveform, we can determine the intensity of the sound at different times, identify the frequency of the sound wave, and even distinguish between different types of sounds based on their unique waveform patterns.

3. Understanding audio waveforms has numerous practical applications. In music production, sound engineers use waveforms to edit and mix tracks to ensure that the volume is balanced and the desired effects are achieved. In speech analysis, waveforms help researchers examine vocal patterns and detect speech disorders. In the field of acoustics, audio waveform analysis can also aid in noise reduction, architectural design, and the development of audio compression algorithms.

The influence of technology

Technology has revolutionized the way we create, analyze and interact with audio waveforms. In this section, we will explore the role of technology in modern society and its influence on audio waveforms.

Exploring the role of technology in modern society

In recent years, technological advances have radically changed the field of audio waveforms. With the advent of digital audio workstations (DAWs) and dedicated software, artists and producers can now manipulate, edit and enhance audio waveforms with unprecedented precision.

Additionally, the widespread adoption of portable recording devices and streaming platforms has democratized access to audio production, allowing anyone with a smartphone to create their own waveforms and share them with others.

Technology has also played a crucial role in the area of data analysis. By converting audio signals into visual waveforms, analysts can extract valuable insights and patterns from large data sets. This has proven particularly useful in areas such as speech recognition, music recommendation algorithms and acoustics research.

Important concepts and definitions

Before diving deeper into the world of audio waveforms, there are a few important concepts and definitions you should understand. Familiarizing yourself with these terms will help you better understand the intricacies of audio waveforms.

Understand basic technical terms

Below are some important technical terms that are commonly used when discussing audio waveforms:

These are just a few of the most important terms you'll come across when exploring audio waveforms. As you familiarize yourself with these concepts, you will be better equipped to understand the intricacies discussed in the following sections.

Visualizing data with waveform images

Visualizing audio data can provide valuable insights and facilitate analysis. In this section, we will explore how waveform images improve data analysis and the different tools available for creating waveform images.

How waveform images improve data analysis

Waveform images provide a comprehensive visual representation of audio data. By looking at waveforms, analysts can identify patterns, anomalies, and various sound characteristics. This visual representation simplifies the understanding and interpretation of complex audio data.

Waveform images also help with audio editing and manipulation. By zooming in and out of specific sections of the waveform, producers and engineers can precisely edit audio, remove noise, or enhance specific elements. This level of control results in higher quality audio recordings and productions.

In addition, waveform images are widely used to illustrate and explain audio-related content in various teaching materials, tutorials and online courses. They provide a clear and concise visual aid that allows students and learners to better understand the concepts being taught.

Demystifying technical jargon

In the world of audio waveforms, understanding the associated jargon is crucial. In this section, we provide a comprehensive guide to technical terminology so you can easily navigate discussions and resources.

A comprehensive guide to technical terminology

Here are some additional technical terms to add to your audio waveform lexicon:

- Waveform display

- Transient processes

- Spectral analysis

Familiarizing yourself with these terms and their meanings will help you better understand and discuss your audio waveforms.

In this comprehensive guide, we've explored the world of audio waveforms, their impact on technology and society, key concepts and definitions, how to use waveform images in data analysis, and demystifying the jargon often used in discussions about audio waveforms. By developing a thorough understanding of audio waveforms, you can unlock new possibilities in music production, audio engineering, data analysis, and more. With this knowledge, you are now able to begin your journey into the world of audio waveforms with confidence and expertise.


Mastering Audio Visualization: A Beginner's Guide to Waveforms


Table of contents

- Understanding the basics: visualization and sound waves

- How does an audio waveform generator work

- Social media

- Music production

- YouTube videos

- Sound engineering

- Visual appeal

- Professional touch

- Informative

- Speechify AI Video Editor

- Echowave.io

- Choosing and using your audio waveform tool

- Customizing your video

- Exporting your audio waveform video

- How can you generate an audio waveform?

- What are the steps to incorporate a waveform into audio?

- How do you craft a soundwave QR code?

- Why would you adjust the amplitude of an audio waveform?

- Can you explain the contrast between a sound wave and an audio wave?

- What are the basic types of waveforms?

- What are the advantages of using an audio waveform generator?

- How is a waveform different from a spectrum?

- What functionality does a waveform editor provide?

- How can you visualize an audio spectrum in After Effects?

- Can you add audio waveforms to videos on iPhone?

- How does a music visualizer work?

- Can video editing software generate a waveform visualization?


Audio waveforms are graphical representations of audio signals that display changes in a signal's amplitude over a period of time. They play a vital role in understanding and manipulating audio content, from podcast creation to music videos.

What is an Audio Waveform?

An audio waveform serves as a snapshot of a sound's overall dynamic range and helps us comprehend the intricacies of sound waves. To understand this better, let's delve into the basics of visualization and sound waves.

Visualization involves converting sound waves into a graphical format that can be understood easily. Sound waves are vibrations that travel through the air or another medium and can be heard when they reach a person's or animal's ear. They have characteristics like amplitude (loudness) and frequency (pitch), which when graphically represented, form an audio waveform. This waveform serves as a visual guide to the audio file, making it easier to edit, navigate, and understand the audio content.

An audio waveform generator creates visual representations of sound waves from audio files. It analyzes the audio file, breaks it down into various segments, and presents each segment's amplitude and frequency as a visual graph. These generators are especially helpful in video editing and music production, where precise editing and synchronization are necessary.
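The segment-by-segment analysis described above can be sketched as a peak summary: split the samples into buckets and keep each bucket's minimum and maximum, which is enough to draw one vertical bar per bucket. This is an assumed, common approach rather than how any specific generator named in this article works.

```python
def waveform_peaks(samples, num_buckets):
    """Summarize samples as (min, max) pairs, at most one per display column."""
    if not samples:
        return []
    # Ceiling division so that we never produce more than `num_buckets` buckets.
    bucket_size = max(1, -(-len(samples) // num_buckets))
    peaks = []
    for start in range(0, len(samples), bucket_size):
        bucket = samples[start:start + bucket_size]
        peaks.append((min(bucket), max(bucket)))
    return peaks


audio = [0.0, 0.5, -0.5, 1.0, -1.0, 0.25, 0.1, -0.1]
peaks = waveform_peaks(audio, num_buckets=4)
print(peaks)  # [(0.0, 0.5), (-0.5, 1.0), (-1.0, 0.25), (-0.1, 0.1)]
```

Keeping both the minimum and the maximum preserves short peaks that plain averaging would smooth away.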

Usage of Audio Waveform

Audio waveforms are a universal tool employed across various sectors, demonstrating their wide-ranging functionality. In essence, they act as visual aids that simplify the audio processing workflow.

The podcasting realm is increasingly adopting audio waveforms. As visual cues, they assist in the audio editing process, streamlining operations such as cutting and trimming and optimizing audio levels. This enhancement leads to an improved listening experience for the audience.

With the surge in online video content, adding audio waveform animations to video posts on social media platforms has become a popular trend. These animated waveforms boost engagement, visually appealing to viewers and promoting interaction with the content.

For music producers and sound engineers, audio waveforms are invaluable. They provide a clear snapshot of a track's structure, helping pinpoint specific sections for editing or mastering, thereby facilitating the production of harmonious tunes.

YouTube creators harness the power of audio waveforms to enrich their videos. They improve the video editing workflow and can even serve as a backdrop for an online video, thereby enhancing the overall aesthetics and drawing more viewers to the YouTube channel.

In the field of sound engineering, audio waveforms allow professionals to analyze audio details intricately. They enable the modification and optimization of audio for performance, playing a crucial role in the delivery of high-quality sound.

Why Should You Use Audio Waveform for Your Video?

Incorporating audio waveforms in your videos is not merely a trendy aesthetic choice, but it carries several practical benefits that can enhance your content's value:

The dynamic movement of animated waveforms can intrigue viewers, drawing their attention and fostering interaction with your content. This added dimension can make your video stand out among the static content on the web.

Audio waveforms add a unique visual element to your content. They can transform a standard audio track into an audio waveform animation, heightening the video's overall aesthetic appeal and attracting more views.

Including waveform animations can instill your content with a professional feel, thereby enhancing your brand image and credibility.

Waveforms reveal essential details about the audio content, such as rhythm, dynamics, and amplitude, providing insights that could potentially inform your creative process.

5 Online Audio Waveform Generators to Try

Are you interested in creating stunning and engaging audio waveforms? Here are five online audio waveform generators that you can use to animate your audio:

Kapwing offers a versatile online editor where you can add audio waveforms to any video, free of charge. You can explore different styles, sizes, and colors to generate an audio waveform that complements your content.

WaveVisual presents an opportunity to generate unique sound wave art in seconds. All you need to do is upload your audio or choose a song from their library or Spotify. WaveVisual's sound wave generator will craft beautiful sound waves tailored to your taste.

The Speechify AI Video Editor can take your videos to another level. It incorporates audiograms that represent speech and sounds using waveforms, inviting viewers to listen in. This approach makes your videos more captivating and dynamic, helping you garner more views and engagement on social media platforms like TikTok, Instagram, and Facebook.

Veed.io provides a straightforward and free tool for generating animated sound waves. With a wide array of templates and customization options, you can shape the waveform to match your specific preferences and needs.

Echowave.io emphasizes the power of audio-waveform animation in driving social media engagement. This platform enables you to add dynamic animations to your videos effortlessly, enhancing the connection between your content and audience.

A Comprehensive Guide for Beginners: Adding Audio Waveforms to Your Videos

Delving into the world of audio waveforms may seem daunting for beginners. Still, with the right tools, guidance, and a little practice, you'll be able to seamlessly integrate audio waveform visuals into your video content. Here's a straightforward step-by-step tutorial to help you through the process, including a specific example using Speechify, an online audio waveform generator.

Start by selecting your audio waveform tool. There are numerous options available online, like Kapwing, Veed.io, or Speechify. Evaluate the pricing and features of each tool and choose the one that best aligns with your needs.

Once you've chosen your tool, you need to upload your audio file. Most of these tools support different audio formats, such as WAV and MP3. After uploading, the software will automatically analyze the audio and generate the waveform. Depending on the tool you chose, you should be able to customize the waveform's style, color, and size to match your video's aesthetics. For instance, Speechify allows you to select a waveform style and further customize it.

Adding personal touches to your video can greatly enhance its appeal. Most waveform tools enable you to customize your video by adding text, subtitles, images, or even GIFs. Carefully chosen fonts and a relevant background image can add depth to your video, creating a perfect blend of audio and visuals.

With Speechify, this customization is just a click away. After generating and customizing your waveform, you can add captions and subtitles or insert images and GIFs directly into your video.

The final step in creating your audio waveform video is to preview it, ensuring the waveform aligns correctly with the audio. If everything looks good, save your changes and export the final video. In Speechify, this process is as simple as clicking 'Export'.

Remember, as with learning any new skill, practice is key. So, don't worry if you don't get it right the first time!

The use of audio waveforms can significantly improve the quality and engagement of your audio and video content. Tools like Speechify make the process of generating and integrating waveforms simpler and more accessible, allowing creators to produce visually captivating content. From podcasters to YouTubers, the value of using audio waveforms cannot be overstated. Experiment with audio waveforms in your next project and see the difference it makes.

Generating an audio waveform can be achieved by using an audio visualizer or video editing software that supports waveform visualization. These tools interpret your audio file and produce a visual depiction of the sound waves.

Incorporating a waveform into audio can be achieved through a music visualizer or sound wave animation tools. These tools create waveforms grounded on your audio's frequency and amplitude, which can subsequently be overlaid on your audio or video content.

To create a Soundwave QR code, you first need to produce a sound wave from your audio file using a sound wave generator. Once your sound wave is ready, a QR code generator can transform the waveform image into a QR code.

Altering the amplitude of an audio waveform influences the perceived volume of the sound. This adjustment is often made to ensure the audio isn't excessively loud or soft and to preserve a consistent volume level throughout the audio file.

Sound waves are physical waves that journey through a medium, such as air, and are detectable by the human ear. On the other hand, audio waves or audio signals are electronic representations of these sound waves. These can be stored, manipulated, and replayed via electronic devices.

The three fundamental types of waveforms are sine waves, square waves, and triangle waves, each possessing its distinctive shape and properties.

Employing an audio waveform generator can amplify the quality of your audio and video content. It can furnish a visual guide for the audio, assist in aligning audio with visuals, facilitate audio editing, and introduce an attractive visual element to your content.

A waveform represents a sound wave graphically over time, showcasing changes in the sound wave's amplitude. In contrast, a spectrum demonstrates how the energy of the sound wave is distributed across frequencies.
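The difference can be sketched with a plain discrete Fourier transform. The waveform below is just a list of amplitudes over time, while the spectrum computed from it shows a single peak at the tone's frequency. This is a slow textbook version for illustration, with an assumed 64 Hz sample rate; real tools use a fast Fourier transform.

```python
import cmath
import math

def magnitude_spectrum(samples):
    """Return the magnitude of each frequency bin from 0 Hz up to Nyquist."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        spectrum.append(abs(acc) / n)
    return spectrum

sample_rate = 64  # assumed, small enough to keep the example fast
signal = [math.sin(2 * math.pi * 8 * t / sample_rate) for t in range(64)]

spectrum = magnitude_spectrum(signal)
peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)
peak_hz = peak_bin * sample_rate / len(signal)
```

Here `signal` is the waveform view (amplitude per sample) and `spectrum` is the spectrum view (energy per frequency), both describing the same 8 Hz tone.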

A waveform editor is a software application permitting you to view and manipulate audio waveforms. It provides a variety of editing functions, such as cutting, copying, pasting, trimming, and adjusting volume levels. A waveform editor can also apply effects and filters to enhance or alter the sound.

After Effects provides a powerful tool called the Audio Spectrum Effect. This tool allows you to create dynamic sound wave animations that can be synced with your audio, thereby visualizing the audio spectrum.

Yes, several apps available on iPhone let you add audio waveforms directly to your videos. This enables you to give a professional touch to your audio projects even while on the go.

A music visualizer generates animated imagery in synchronization with a music track. It uses the frequency and amplitude of the music to drive the animation, creating a visually immersive experience for viewers.

Yes, many video editing programs can generate waveform visualizations. These visualizations can help with editing tasks by providing a visual reference for the audio content, making it easier to synchronize audio and video content.

Cliff Weitzman

Cliff Weitzman is a dyslexia advocate and the CEO and founder of Speechify, the #1 text-to-speech app in the world, totaling over 100,000 5-star reviews and ranking first place in the App Store for the News & Magazines category. In 2017, Weitzman was named to the Forbes 30 under 30 list for his work making the internet more accessible to people with learning disabilities. Cliff Weitzman has been featured in EdSurge, Inc., PC Mag, Entrepreneur, Mashable, among other leading outlets.

Learnings from the frontlines of music creation.

Proudly brought to you by LANDR

What is a Spectrogram? The Producer’s Guide to Visual Audio

Have you ever wished you could see audio for a better understanding of your sound?

That’s exactly what’s possible with a spectrogram. It’s a technology that transforms complex audio information into a readable visual form.

But beyond just viewing your sonic information, spectral analysis lets you manipulate sound like never before.

That said, getting started with spectral processing can be challenging if you’ve never used it before.

In this guide, I’ll explain everything you need to know about spectrograms and how you can use them in music production.

From enhancing your audio editing to experimenting with spectral effects, these tools unlock a new way to work with audio.

Let’s get started.

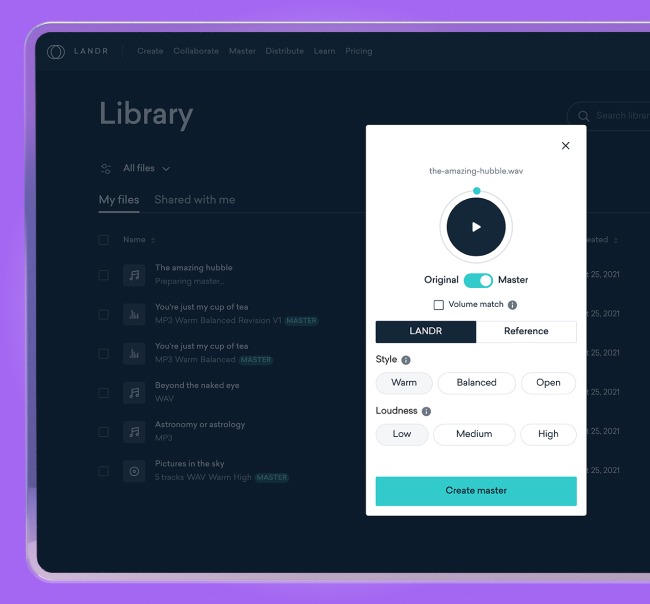

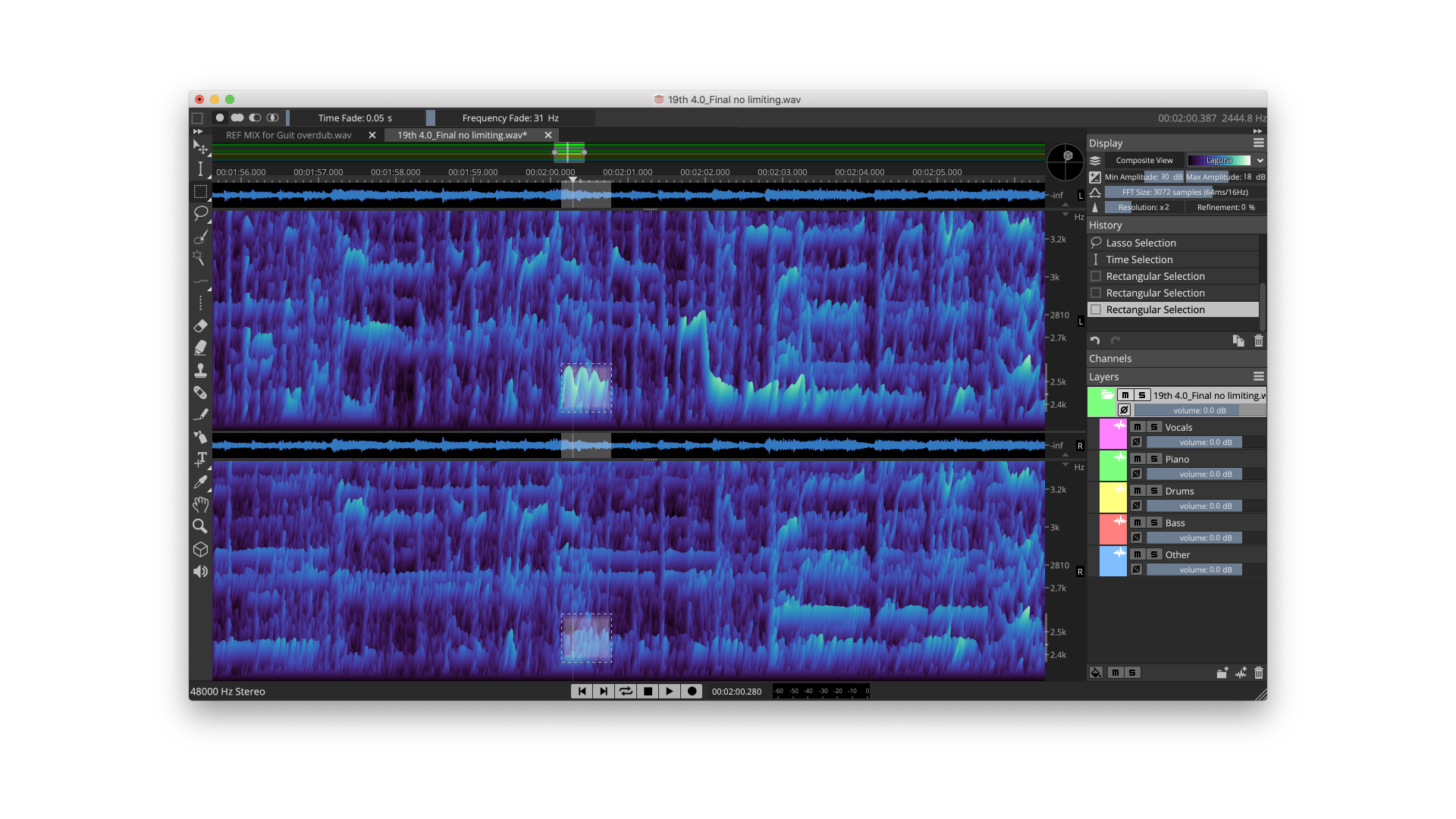

What is a spectrogram?

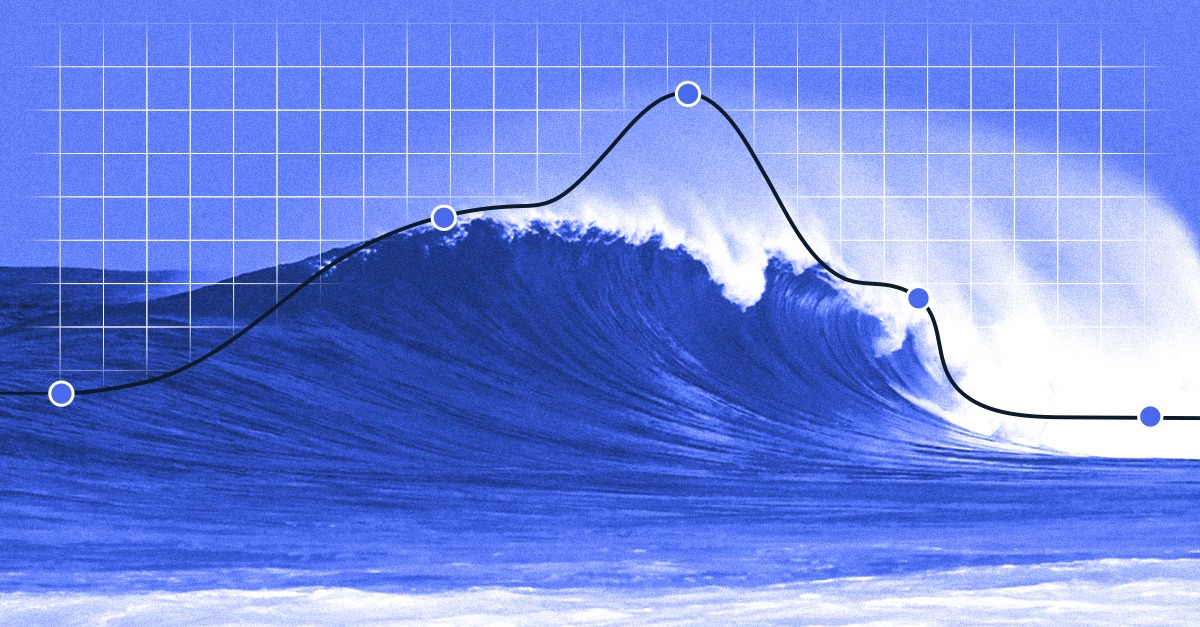

A spectrogram is a visual representation of the spectrum of frequencies in a signal as they vary over time.

They’re displayed as a two-dimensional graph with time on the x-axis and frequency on the y-axis.

The color or intensity of the filled in areas represents the sound’s amplitude. Like an infrared camera, areas with greater amplitude appear more opaque or in a brighter hue.

This method of displaying the sound gives you unique information about its frequency content and temporal evolution.

Tools like spectral filtering and morphing open up creative sound design possibilities that other methods can’t offer.

Despite all that, spectrograms aren’t widely used by emerging producers.

Spectral processing is relatively new and not immediately intuitive for those used to traditional waveform views.

Luckily, there are several options available to producers today for generating spectrograms and working with audio in the frequency domain.

Read - Spectrum Analyzer: How to Visualize Your Signal in Mixing

How can you view a spectrogram?

To view a spectrogram, you’ll need software that can convert traditional audio recordings into visual representations of the frequencies.

This is done using a technique called the Fourier Transform. It breaks down the signal into its individual frequency components, allowing you to see the amplitude of each frequency at specific points in time.

Traditional spectral editing works on a complete audio file, so it’s important to note that this kind of detailed analysis is usually done offline rather than in real time.

While that might sound complicated, today’s spectrogram software makes it easy.

Tools in your DAW or specialized third-party plugins take care of the technical details so you can focus on using the tools to improve your results.
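If you’re curious what that software is doing under the hood, the idea can be sketched in a few lines: slice the audio into short overlapping frames, apply a window to each, and run a Fourier transform per frame. The result is a grid of magnitudes with time on one axis and frequency on the other. The frame size, hop size and 512 Hz sample rate below are assumptions picked to keep the example small, and the plain DFT is far slower than the FFT real tools use.

```python
import cmath
import math

def hann(n):
    """Hann window, used to soften the edges of each frame."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]

def spectrogram(samples, frame_size=64, hop=32):
    """Return one row of frequency magnitudes per overlapping time frame."""
    window = hann(frame_size)
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + i] * window[i] for i in range(frame_size)]
        row = []
        for k in range(frame_size // 2 + 1):
            acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / frame_size)
                      for t in range(frame_size))
            row.append(abs(acc))
        frames.append(row)
    return frames

# A low tone followed by a high tone, at an assumed 512 Hz sample rate.
sample_rate = 512
low = [math.sin(2 * math.pi * 32 * n / sample_rate) for n in range(256)]
high = [math.sin(2 * math.pi * 128 * n / sample_rate) for n in range(256)]
frames = spectrogram(low + high)
```

With these settings each frequency bin is 8 Hz wide, so the early frames are brightest at bin 4 (32 Hz) and the late frames at bin 16 (128 Hz). Plotting `frames` as an image gives the familiar spectrogram picture.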

How can spectrograms help you make better music?

Speaking of which, you’re probably wondering how this advanced technology can actually improve your finished product.

A visual representation of the frequency information in your audio tracks is surprisingly powerful. Combine that with processing that can only take place in the frequency domain and you have some serious audio super powers.

Here are five real world uses for spectrogram tools:

1. Clean up recordings

Spectrograms can help you identify and remove unwanted noises or artifacts, such as hums, clicks, or pops, from your recordings.

By visually locating these sounds in the frequency spectrum, you can use tools like spectral filters or noise reduction to isolate and eliminate them without affecting the rest of the audio.

This is a game changer for any producer that has to deal with noise and artifacts from working in a DIY studio.
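As a simplified sketch of the idea, the code below removes a steady 50 Hz hum by transforming the audio to the frequency domain, zeroing the bins around the hum, and transforming back. It assumes a hum that sits exactly on one frequency bin and uses a slow textbook DFT. Commercial spectral repair tools work on short frames with much gentler filters, so treat this as an illustration rather than how any specific product works.

```python
import cmath
import math

def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(bins):
    n = len(bins)
    return [(sum(bins[k] * cmath.exp(2j * math.pi * k * t / n)
                 for k in range(n)) / n).real
            for t in range(n)]

def remove_band(samples, sample_rate, low_hz, high_hz):
    """Zero every frequency bin between low_hz and high_hz, then rebuild."""
    n = len(samples)
    bins = dft(samples)
    for k in range(n):
        # Bins above n/2 mirror the lower ones, so map both to the same frequency.
        freq = min(k, n - k) * sample_rate / n
        if low_hz <= freq <= high_hz:
            bins[k] = 0
    return idft(bins)

sample_rate = 400  # assumed, kept small so the slow DFT stays quick
tone = [math.sin(2 * math.pi * 20 * n / sample_rate) for n in range(200)]
hum = [0.5 * math.sin(2 * math.pi * 50 * n / sample_rate) for n in range(200)]
noisy = [t + h for t, h in zip(tone, hum)]

cleaned = remove_band(noisy, sample_rate, 45, 55)
```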

2. Unique sound design

Spectral processing allows you to create never before heard sounds by manipulating the frequency content of your audio.

You can stretch, shift, or morph frequencies, combine elements from different sounds, or apply spectral effects like reverb or delay to specific frequency ranges.

This can lead to new and innovative textures that other methods can’t match.

3. Mixing in the frequency domain

Spectrograms can help you make more informed decisions when it comes to your mix. Amplitude hotspots can give you clues about problematic areas and concentrations of sonic energy.

You can identify frequency clashes between instruments or vocals, spot elements that need EQ adjustments, or visualize the stereo field to get a more balanced and cohesive sound.

4. Restoration and archiving

Spectral processing has become essential for audio restoration and archiving projects.

Spectrograms can be used to identify destructive processes like transcoding or even rebuild lost information to improve fidelity.

If you’re a producer that works with lo-fi recordings or vintage samples, this approach can help you integrate found sound and damaged audio back into your work.

5. Enhancing vocals

Vocals are another area where spectrogram editing excels.

Cutting edge tools can remove sibilance, plosives, or breath noises and even enhance specific frequency ranges, making the vocals sit better in the mix.

The best audio software for working with spectrograms

With the basics out of the way, here are the best apps for spectral processing in music production:

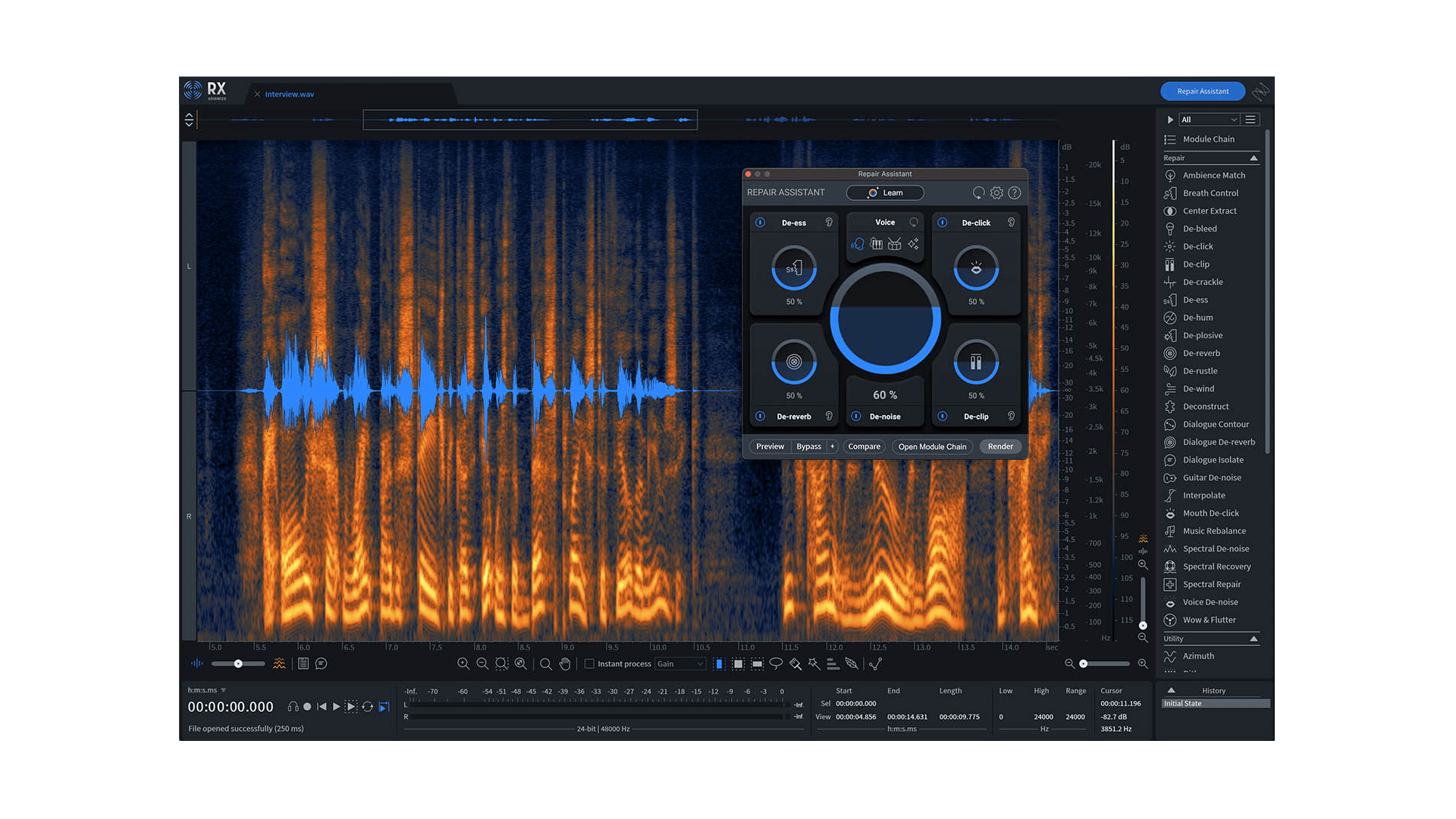

1. iZotope RX

iZotope RX is an audio restoration and spectral editing suite that offers powerful features for working with spectrograms.

RX comes with many different audio tools, but its standout capability is the Spectral Repair module. This lets you easily identify and remove unwanted noises and artifacts by eye.

RX’s other spectrogram tools include a spectral de-esser and advanced de-click and de-reverb modules.

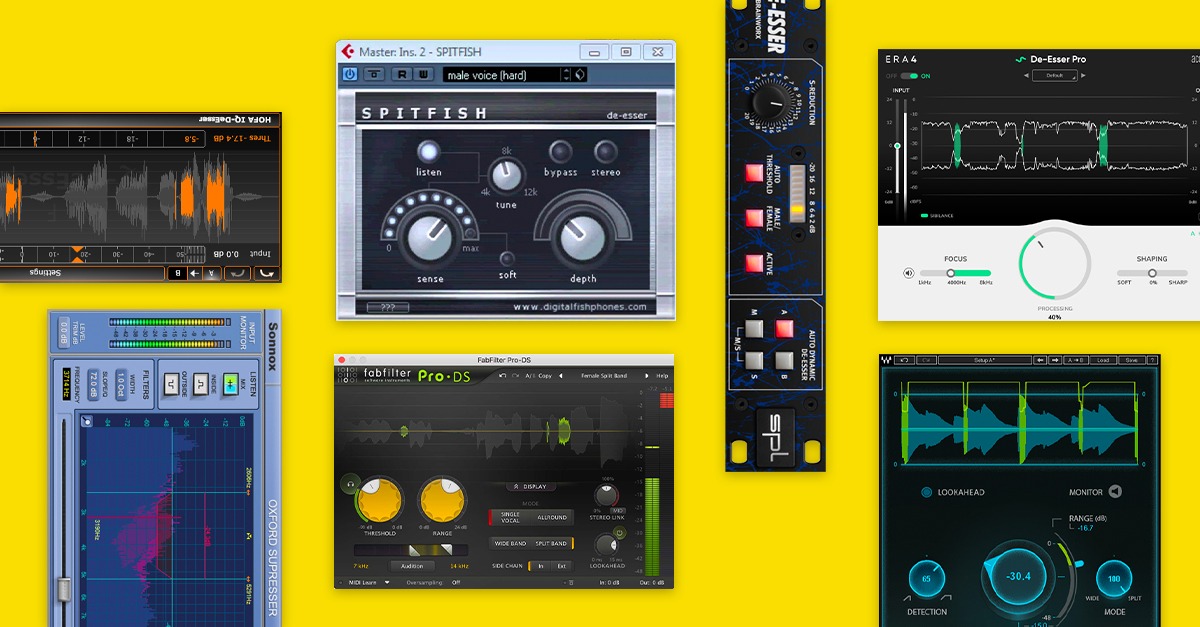

Read - What is a De-Esser? The 8 Best De-Esser VSTs for Pro Vocals

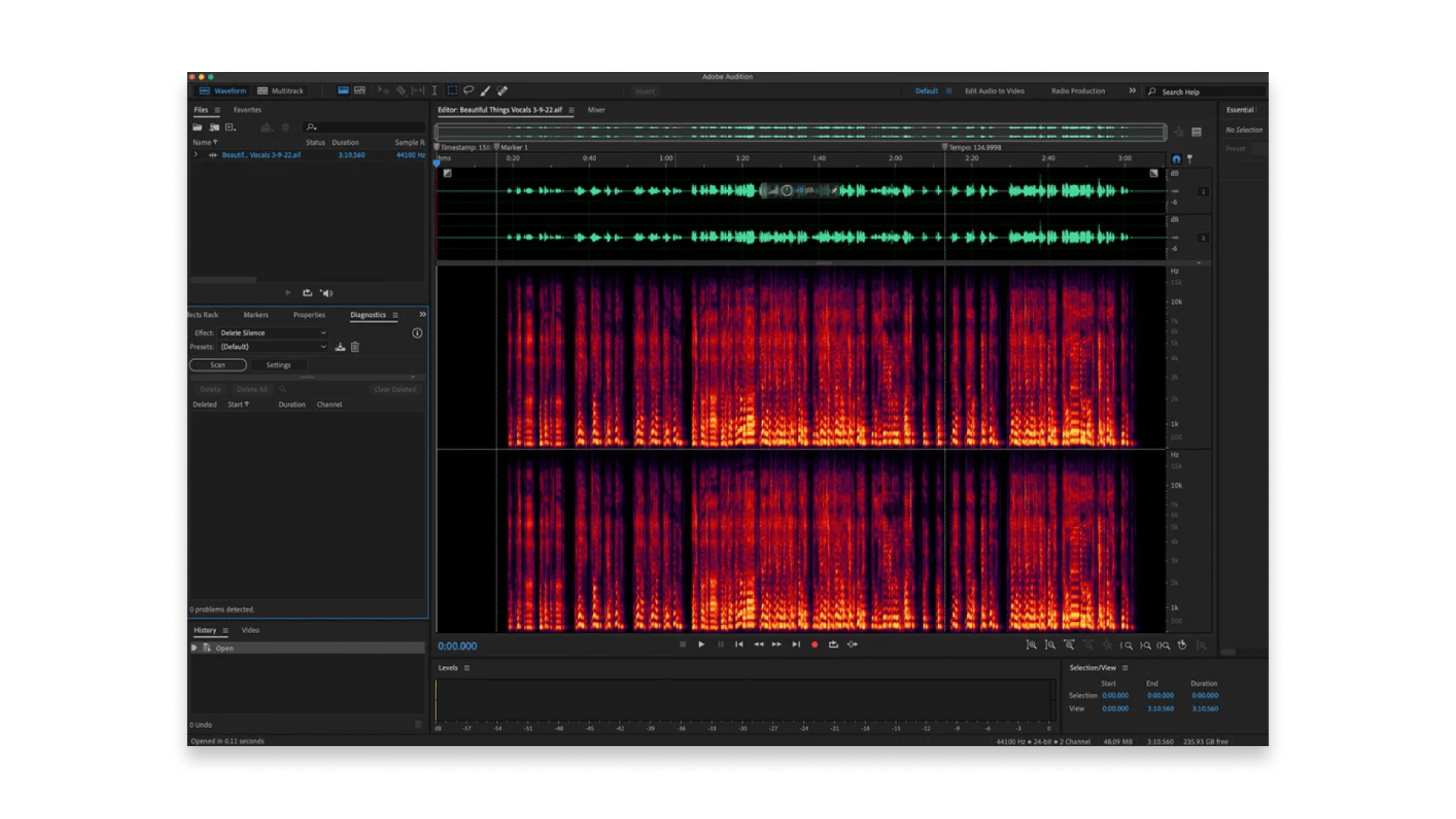

2. Adobe Audition

Adobe’s Audition DAW comes with spectral editing capabilities built in.

Its dedicated Spectral Frequency Display allows you to view and edit the spectral content of any track in your session. On top of that, its built-in spectral effects and noise reduction provide plenty of flexibility for any task.

Audition integrates seamlessly with other Creative Cloud applications, making it an attractive option for users who rely on other Adobe products.

3. Steinberg SpectraLayers Pro

Steinberg’s SpectraLayers Pro is a dedicated spectral editing software with highly advanced technology.

It allows you to work with individual layers of your audio, making it possible to isolate and manipulate specific elements for mixing or sound design.

SpectraLayers Pro goes deep with high-tech spectral editing tools like spectral casting and molding.

This option is especially exciting for producers looking to dive deep into spectral manipulation for creative sound design or detailed audio editing tasks.

4. Audacity

Audacity is a free, open-source DAW that offers basic spectral editing capabilities.

It features a spectrogram view that lets you view and analyze the frequency content. It offers basic spectral editing operations like noise reduction and filtering to clean up issues in your files.

While not as feature-rich or specialized as the other options on this list, Audacity is a budget-friendly choice. Consider it if you just want to explore spectrograms and spectral editing without paying too much.

Spectrogram world

Spectrograms are your visual window into the frequencies that make up your music.

They can up your sound design skills, make your tracks cleaner and fix issues you thought were beyond repair.

Whether you’re removing unwanted noises, enhancing specific elements in a mix, or exploring the realm of spectral sound design, spectrograms are a great tool to add to your toolbox.

Now that you know the basics, go ahead and have fun diving into the world of spectral processing.

Michael Hahn is an engineer and producer at Autoland and member of the swirling indie rock trio Slight.

Visualizing Sound: A Beginner’s Guide to Using TouchDesigner with Live

An audio wheel by Bileam Tschepe showing a visual representation of the song, "Says" by Nils Frahm

Throughout the history of electronic music, audiovisual art has been integral to its aesthetic identity, both in live performance settings and more recently online. As a result, ever more music makers are incorporating visual media into their work, leading to a new generation of artists who continue to push the boundaries of music’s visual representation.

While there are many different ways to create audiovisual art, we’ve been hearing from a growing number of people who’ve been turning to the node-based programming language TouchDesigner to augment their music with real-time visuals and other media-based works. Developed by the Toronto-based company Derivative, TouchDesigner was created primarily for artists who want to work with image, light and sound. The application is typically used to craft live performances, installations, music visuals and other multi-sensory experiences — although use cases extend far beyond creative arts. Whether it's through projection mapping, virtual reality, or physical computing, TouchDesigner helps people bring their ideas to life in numerous ways.

In this beginner's guide, we'll introduce you to TouchDesigner and explore some of the ways in which you can use the platform to create various kinds of audiovisual art. Along the way, we'll be joined by Derivative’s co-founder Greg Hermanovic and Berlin-based A/V artists Bileam Tschepe and Ioana Bîlea. We’re grateful to them for talking with us and sharing their expertise and insights into the history of TouchDesigner, the available resources for learning the platform, and its creative potential when used with Live.

Visuals for Joachim Spieth's Terrain album, by Stanislav Glazov

Greg Hermanovic

From early on in his working life, Greg Hermanovic was always drawn to the idea of creating interactive tools for artists. Prior to his career in graphic software, he worked in aerospace, where he developed real-time simulators for pilot training and for the US Space Shuttle robot arm. Hermanovic later co-founded Side Effects Software, the makers of Houdini, and received two Academy Awards for his work in procedural visual effects. In 2000, he branched off to start Derivative, where he currently leads the team that makes TouchDesigner.

“A lot of the roots of TouchDesigner are in modular and analog synthesizers,” Hermanovic says. “The first synths I got to put my hands on were the EMS Synthi, the ARP 2600, and a bit of Moog. And at the same time, I was really interested in experimental films like ‘Synchromy’ by Norman McLaren. At the time McLaren was creating soundtracks from images drawn directly onto film. So the soundtrack was the image and the image was the soundtrack. This really excited me because I realized, OK, here’s a marriage of the two.”

Elekktronaut

Bileam Tschepe - also known as Elekktronaut - uses TouchDesigner to create interactive, organic, audiovisual art, as well as installations and live performances. He also hosts TouchDesigner workshops and meetups at Music Hackspace and has a YouTube channel featuring easy-to-follow TouchDesigner tutorials. Tschepe describes how he sees images, forms, and colors when he listens to music. “It's very abstract,” he says. “I can’t really explain it. It can be really hard to realize what I see. But, I think TouchDesigner is the perfect tool to do it.”

Visuals to Marlon Hoffstadt’s “Mantra Of A New Life,” by Bileam Tschepe

Spherical Aberration

For Ioana Bîlea, aka Spherical Aberration, getting into audiovisual arts came out of frustration. “I was coordinating a music festival in Romania,” she remembers. “But I always wanted to make music. It wasn't until I moved to Berlin, however, that I found a community who were really supporting female artists. I found the VJ community was generally more open though, so I eventually started VJing with Resolume. But that got boring for me, especially for bigger events where I would have to be there all night. I was looking for a tool that was more entertaining and fun. One night a friend showed me TouchDesigner and I immediately realized that's what I was looking for.”

An audiovisual collaboration between Spherical Aberration and Camilla Pisani

Artists working with TouchDesigner

Throughout his career in visual effects, Hermanovic has always been inspired and influenced by music culture. In the mid-'90s, he regularly moonlighted as an audiovisual artist for some of Toronto’s early rave parties. These experiences would ultimately contribute to shaping and inspiring many of the features we see in TouchDesigner today. “I was doing visuals voluntarily for this rave company called Chemistry,” he recalls. “I remember trying to project pictures onto the wall in real-time while doing live editing, live coding, and all that kind of stuff. At one event, I was typing in this super long expression of code, trying to get something to wobble on the screen with some decay and stuff like that. And I thought this was insane. I was trying to type parenthesis, going nuts, during a performance, with people stumbling, and dropping their drinks on my keyboard. And so that was the moment I said, OK, we need to put a new system of Operators into our software which we called CHOPS. So CHOPS were born through a lot of pain and inconvenience”.

In the late ‘90s, a fortuitous encounter between Hermanovic and Richie Hawtin paved the way for their first audiovisual collaboration at the Mutek festival in Montreal. At the time Hawtin was using an early version of Ableton Live with a custom MIDI controller, while at Derivative, Hermanovic and his team were working on early builds of TouchDesigner. “Richie and I pushed the technologies together and made a couple of songs,” remembers Hermanovic. “Some worked well, others didn’t. Later, we did the Plastikman 2010 tour with a lot more time, better organization, and technology behind us.”

Richie Hawtin presents Plastikman live, Timewarp 2010

Since the days of Hermanovic and Hawtin’s pioneering collaborations, the number of people using TouchDesigner to combine audio, video, graphics, and data has grown exponentially worldwide. Some notable names using the program include Christopher Bauder, Ben Heim, CLAUDE, Markus Heckmann, and Carsten Nicolai aka Alva Noto. These artists have used TouchDesigner to create a wide range of projects. Many of them also use TouchDesigner as a platform for prototyping and developing new ideas.

“Christopher Bauder’s studio used TouchDesigner for a collaboration with Robert Henke called DEEP WEB,” remembers Bîlea. “They had a giant audiovisual installation at Kraftwerk in Berlin. And there are people like 404zero who use TouchDesigner to generate controlling signals for a modular synth setup. And there’s NONOTAK Studio, who use it to control their light installations with sound.”

Below is a YouTube playlist featuring the work of various artists using TouchDesigner. This playlist showcases a range of creative possibilities which may spark inspiration for those interested in using the platform in their own work.

Learning TouchDesigner

TouchDesigner, like any programming language, has a learning curve, but those with experience in synthesis, Live, or Max/MSP may find some familiar concepts. There are many online resources available to help individuals at any level of experience learn TouchDesigner. “You’ve got to start somewhere and get your feet wet,” Hermanovic advises. “So try something easy first. Then you’ll get instant results which will motivate you to keep building.”

Music Hackspace, in partnership with Derivative, offers a free online course for those just getting started with TouchDesigner. In addition, they hold monthly meetups featuring presentations and discussions on TouchDesigner projects. “The course is designed for beginners or people who’ve never programmed before,” says Tschepe. “Everyone has to learn the same basics at first. Then you can branch out and focus on different parts that interest you. At our meetups, there's always a chance for a Q&A followed by an open discussion. And usually, someone from Derivative joins.”

“One of the things I really like about Music Hackspace meetups and other events we’re at is the opportunity to watch people use our software over their shoulders,” says Hermanovic. “Because quite often what our users really need isn’t apparent from what they say. Also, our users often miss the new tricks and shortcuts we add to TouchDesigner. So for us, the meetups provide good lessons because we can learn how to make new features more apparent to people”.

TouchDesigner also has a thriving community of users who share inspiration and know-how via Facebook groups, the Derivative forum and Discord servers. The community is where users who have skills can connect with those who either need to learn or want to find others to collaborate with.

“It was rough at first because there were no tutorials and the community was super small,” says Bîlea. “But we had a Facebook group and a GitHub page. The people on Derivative’s forum were so nice. You could ask a question, and someone would always jump in and try to help you. Aurelian Ionus aka Paketa 12 has been one of the main contributors to the community. We’ve all been inspired by him. He gives good advice and a lot of it for free. I don't think I would have gotten to where I am now without his coaching.”

“When I started there weren't that many tutorials and no beginner courses,” remembers Tschepe. “Without Matthew Ragan, I wouldn't have known where to begin. There are a few of his university classes on YouTube. These videos were definitely helpful for me when starting out.”

TouchDesigner has a free non-commercial license for users who are getting started. While the non-commercial license limits the resolution, all basic operations and functions remain accessible for those wanting to use it for their personal learning and non-paying projects.

“We really wanted to get TouchDesigner in the hands of as many people as possible so they could see if they like it or not,” Hermanovic mentions. “And we wanted to give access in countries where money is harder to come by, so there’s affordability. People can then make some art that is lower resolution but not watermarked. Some projects you can even do end to end, non-commercially without any limitations really.”

Useful links on getting started with TouchDesigner

Download TouchDesigner

Derivative’s official Learn TouchDesigner help page

TouchDesigner Beginner Course, by Bileam Tschepe

Matthew Ragan Teaching Resources

A list of all TouchDesigner tutorials out there

Joy Division in TouchDesigner, with Paketa 12

Understanding Operators

Operators in TouchDesigner can be thought of as the building blocks or ingredients that make up your project. They are essentially small nodes that perform specific tasks, such as generating images, processing audio, or controlling data. You can use these Operators by themselves or combine them in different ways to create more complex behaviors and effects. To help explain the concept further, Hermanovic likens TouchDesigner’s family of Operators to the Periodic Table of elements. “Imagine every element does something different, there's no commonality,” he says. “And the elements combine to form molecules. And the molecules are these powerful things that form life. That's how I wanted TouchDesigner to work at the basic level. All of the Operators are bite-sized enough that each one has a good job, and can do lots of interesting things without being overloaded with features.”

TouchDesigner has a collection of sixteen Operators that are considered to be the most fundamental and essential for building projects. These are referred to as the “Sweet 16”. “They are the most popular and useful Operators which everyone should get to know,” Hermanovic suggests.

TouchDesigner also features a Palette Browser with pre-constructed networks of Operators, called Components. Beginners can quickly get started by dragging and dropping these Components into their projects. Additional Components can be downloaded from AlphaMoonbase's OLIB library page and ChopChopChop.org.

Operator Families, with Bileam Tschepe

For those not wanting to dive too deeply into node-based programming, Tschepe is currently developing Algorhythm, a real-time interface for TouchDesigner that allows users to easily play and manipulate generative visuals and media using audio and other inputs. Algorhythm allows users to fade between visuals and add post-effects without needing to program large networks of Operators. It is currently available through Tschepe's Patreon.

Making audio-reactive visuals with TouchDesigner and Ableton Live

There are several ways that TouchDesigner can be integrated with Live to create audio-reactive visuals and other kinds of interactive experiences. One approach is to use TouchDesigner to control audio and video clips in Live. This can be done by sending OSC (Open Sound Control) messages from TouchDesigner to Live. Another method is to use TouchDesigner to visualize audio data in real-time, such as waveforms or spectrums, using the audio input from Live. This can be done using TouchDesigner's audio analysis tools which convert sound frequencies into numerical data. The data can then be connected to any number of TouchDesigner’s Operators opening up infinite possibilities for real-time music visualization.
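To give a feel for what "converting sound into numerical data" means, here is a generic Python sketch that is not TouchDesigner code and does not use its Operators. It measures the RMS level of each block of audio and smooths the result, producing one number per block that could drive a visual parameter such as scale or brightness. The function names, block size and smoothing value are assumptions for illustration.

```python
import math

def rms(block):
    """Root-mean-square level of one block of samples."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))

def follow_levels(samples, block_size, smoothing=0.5):
    """Return one smoothed level per block; higher smoothing reacts more slowly."""
    levels = []
    current = 0.0
    for start in range(0, len(samples), block_size):
        level = rms(samples[start:start + block_size])
        current = smoothing * current + (1 - smoothing) * level
        levels.append(current)
    return levels

loud = [1.0, -1.0] * 50   # 100 samples at full level
quiet = [0.0] * 100       # 100 samples of silence
levels = follow_levels(loud + quiet, block_size=50, smoothing=0.5)
```

The smoothing step is what makes visuals swell and decay with the music instead of flickering on every block.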

TouchDesigner's Ableton Link CHOP can also sync Live Sets using timing information on a Link-enabled network. You can take it further with the TDAbleton tool, which offers tight, two-way integration and full access to almost everything going on in a Live Set. The recently released TDAbleton 2.0 update also includes access to Clip information in Live 11 arrangements and allows users to trigger Macro Variations and access all 16 Rack Macros.

Alternatively, OSC signals can be sent between Live and TouchDesigner using the LiveGrabber Max for Live plugins.
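For readers wondering what an OSC signal actually looks like on the wire, the sketch below builds a single-float OSC message by hand and shows how it could be sent over UDP. The address `/viz/level`, the host and the port are hypothetical. Neither TouchDesigner nor LiveGrabber defines them, so you would substitute whatever address and port your own setup listens on.

```python
import socket
import struct

def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii")
    return data + b"\x00" * (4 - len(data) % 4)

def osc_message(address, value):
    """Build an OSC message carrying one 32-bit big-endian float."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)

def send_osc(address, value, host="127.0.0.1", port=9000):
    """Send one message over UDP (host and port are placeholder values)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, value), (host, port))

packet = osc_message("/viz/level", 0.5)
```

Calling `send_osc("/viz/level", 0.5)` would deliver that packet to a listener on the local machine. In practice a dedicated OSC library or the tools named above handle this encoding for you.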

“I’m happy there are great audio tools already out there through Ableton, VST and stuff like that,” says Hermanovic. “So we don’t really have to go there. But just being on the periphery of audio and being able to talk two ways with it is really good. I’m excited about the development of TDAbleton. The feedback I’m getting is that its link is pretty tight, flexible and open”.

Useful links on audio-reactive visuals and TouchDesigner’s integration with Live

Ableton & TouchDesigner: How to Build an Audio & Visual Live Set, with CLAUDE

Audio-Reactive Visuals in TouchDesigner, with Torin Blankensmith

Make Anything Audio Reactive, with Bileam Tschepe

TDAbleton Tutorials, with Bileam Tschepe

Beat Detection Tutorial, with Bileam Tschepe

Audio Reactive Particle Cloud Tutorial, with Bileam Tschepe

Audio Reactive articles on Derivative.ca

‘Bouncing liquid’, created using Live and TouchDesigner

Choosing the right computer

When choosing a computer for use with TouchDesigner, it is advisable to opt for the most powerful configuration that your budget allows. This will provide the best performance and enable you to tackle more complex projects. Factors to consider when determining the spec of your computer include:

Processor: TouchDesigner can be a CPU-intensive application, so it's important to have a powerful processor. Look for a processor with a high number of cores and a high clock speed.

Graphics card: TouchDesigner is also graphics-intensive, so a good graphics card is important. Look for a card with a high amount of dedicated video memory and support for GPU acceleration. It’s also worth noting that certain TouchDesigner features will only work with Nvidia GPUs.

Memory: TouchDesigner benefits from a lot of memory, so look for a computer with at least 16GB of RAM.

Storage: TouchDesigner files can be quite large, so it's important to have a fast and large hard drive or SSD to store them on.

Portability: If you need to take your computer with you on the go, you'll want to consider its size and weight.

“Technically for the basic stuff, you don't really need a good computer,” says Tschepe. “But, if you’re doing some high-end 3D work you’re going to have issues. You’ll need a really powerful computer. Or if you want to render an 8K live projection, obviously you have to get a really good graphics card.”

“I bought a gaming laptop,” says Bîlea. “When I bought it three years ago it was one of the most powerful of its kind. Now it’s already falling behind. But it’s still working well for what I need. The Nvidia graphics cards upgrade pretty fast though. You could change your laptop every year if you wanted.”

“At one time I had to ask Silicon Graphics to borrow one of their workstations,” Hermanovic reminisces. “It took four people to move, they were so heavy. They cost $250,000. There were these poor guys pulling it up stairways into the space. Fortunately, the affordability of hardware has come down a lot. We’re piggybacking off the gaming industry because it’s driven the price of graphic chips right down.”

Finding inspiration

There are many ways to find inspiration for audiovisual projects in TouchDesigner. One way is to attend events such as music festivals and conferences to see what other people are doing with audiovisual technology. You can also look at the portfolios of other artists and designers to see what they have created. And of course, looking to other art forms and occasionally stepping away from the computer and into the world can provide endless ideas and inspiration.

“Personally I think I pick ideas more from non-TouchDesigner-related things,” says Hermanovic. “There’s so much amazing art in many other fields spanning hundreds of years. I’m influenced by painting and photography from way back. I really liked going to NYC in the ‘70s because of the explosion in the Soho area of art galleries and large-format art and installations. That was a hotbed of creativity back then.”

“My visuals are inspired by nature and organic textures,” says Bîlea. “Everything is evolving and slow-moving. I don't usually work with sharp edges or bright colors. I think my music has the same source of inspiration, so it's very ambient.”

“I also like to recreate things I see in nature,” says Tschepe. “I can be inspired by anything really. Anywhere I see natural patterns. It could even be the inside of a coffee mug.”

Forms in Wood, by Bileam Tschepe

We hope that this beginner’s guide has given you a taste of the creative possibilities of using TouchDesigner together with Live and some ideas on where to start. Whether you're an experienced A/V artist looking to expand your skills, or a newcomer to the field looking to explore the intersections of sound, music, and visuals, TouchDesigner offers a wealth of opportunities for exploration and expression. There are no strict rules for using these tools, and the only limit is your imagination. We look forward to hearing – and seeing – what you come up with.

Keep up with Elekktronaut and Spherical Aberration. Follow Derivative on YouTube.

Text and interviews by Joseph Joyce and Phelan Kane

Artwork by Bileam Tschepe (Elekktronaut)

A curated list of audio-visual learning methods and datasets.

GeWu-Lab/awesome-audiovisual-learning

This is a curated list of audio-visual learning methods and datasets, based on our survey: "Learning in Audio-visual Context: A Review, Analysis, and New Perspective". This list will continue to be updated. Please feel free to nominate good related works with pull requests!

[Website of Our Survey], [arXiv]

Table of contents

Speech recognition, speaker recognition, action recognition, emotion recognition, speech enhancement and separation, object sound separation, face super-resolution and reconstruction, natural sound, spatial sound generation, talking face, image manipulation, depth estimation, audio-visual transfer learning, cross-modal retrieval, audio-visual representation learning, sound localization in videos, audio-visual saliency detection, audio-visual navigation, localization, question answering, audio-visual boosting, audio-visual recognition.

[Applied Intelligence-2015] Audio-visual Speech Recognition Using Deep Learning Authors: Kuniaki Noda, Yuki Yamaguchi, Kazuhiro Nakadai, Hiroshi G. Okuno, Tetsuya Ogata Institution: Waseda University; Kyoto University; Honda Research Institute Japan Co., Ltd.

[CVPR-2016] Temporal Multimodal Learning in Audiovisual Speech Recognition Authors: Di Hu, Xuelong Li, Xiaoqiang Lu Institution: Northwestern Polytechnical University; Chinese Academy of Sciences

[AVSP-2017] End-To-End Audiovisual Fusion With LSTMs Authors: Stavros Petridis, Yujiang Wang, Zuwei Li, Maja Pantic Institution: Imperial College London; University of Twente

[IEEE TPAMI-2018] Deep Audio-visual Speech Recognition Authors: Triantafyllos Afouras, Joon Son Chung, Andrew Senior, Oriol Vinyals, Andrew Zisserman Institution: University of Oxford; Google Inc.

[2019] Explicit Sparse Transformer: Concentrated Attention Through Explicit Selection Authors: Guangxiang Zhao, Junyang Lin, Zhiyuan Zhang, Xuancheng Ren, Qi Su, Xu Sun Institution: Peking University

[IEEE TNNLS-2022] Multimodal Sparse Transformer Network for Audio-visual Speech Recognition Authors: Qiya Song, Bin Sun, Shutao Li Institution: Hunan University

[Interspeech-2022] Robust Self-Supervised Audio-visual Speech Recognition Authors: Bowen Shi, Wei-Ning Hsu, Abdelrahman Mohamed Institution: Toyota Technological Institute at Chicago; Meta AI

[2022] Bayesian Neural Network Language Modeling for Speech Recognition Authors: Boyang Xue, Shoukang Hu, Junhao Xu, Mengzhe Geng, Xunying Liu, Helen Meng Institution: the Chinese University of Hong Kong

[Interspeech-2022] Visual Context-driven Audio Feature Enhancement for Robust End-to-End Audio-Visual Speech Recognition Authors: Joanna Hong, Minsu Kim, Daehun Yoo, Yong Man Ro Institution: Korea Advanced Institute of Science and Technology; Genesis Lab Inc.

[MLSP-2022] Rethinking Audio-visual Synchronization for Active Speaker Detection Authors: Abudukelimu Wuerkaixi, You Zhang, Zhiyao Duan, Changshui Zhang Institution: Tsinghua University; Beijing National Research Center for Information Science and Technology; University of Rochester

[NeurIPS-2022] A Single Self-Supervised Model for Many Speech Modalities Enables Zero-Shot Modality Transfer Authors: Wei-Ning Hsu, Bowen Shi Institution: Toyota Technological Institute at Chicago

[ITOEC-2022] FSMS: An Enhanced Polynomial Sampling Fusion Method for Audio-Visual Speech Recognition Authors: Chenghan Li, Yuxin Zhang, Huaichang Du Institution: Communication University of China

[IJCNN-2022] Continuous Phoneme Recognition based on Audio-Visual Modality Fusion Authors: Julius Richter, Jeanine Liebold, Timo Gerkmann Institution: Universität Hamburg

[ICIP-2022] Learning Contextually Fused Audio-Visual Representations For Audio-Visual Speech Recognition Authors: Zi-Qiang Zhang, Jie Zhang, Jian-Shu Zhang, Ming-Hui Wu, Xin Fang, Li-Rong Dai Institution: University of Science and Technology of China; Chinese Academy of Sciences; iFLYTEK Co., Ltd.

[ICASSP-2023] Self-Supervised Audio-Visual Speech Representations Learning By Multimodal Self-Distillation Authors: Jing-Xuan Zhang, Genshun Wan, Zhen-Hua Ling, Jia Pan, Jianqing Gao, Cong Liu Institution: University of Science and Technology of China; iFLYTEK Co. Ltd.

[CVPR-2022] Improving Multimodal Speech Recognition by Data Augmentation and Speech Representations Authors: Dan Oneaţă, Horia Cucu Institution: University POLITEHNICA of Bucharest

[AAAI-2022] Distinguishing Homophenes Using Multi-Head Visual-Audio Memory for Lip Reading Authors: Minsu Kim, Jeong Hun Yeo, Yong Man Ro Institution: Korea Advanced Institute of Science and Technology

[AAAI-2023] Leveraging Modality-specific Representations for Audio-visual Speech Recognition via Reinforcement Learning Authors: Chen Chen, Yuchen Hu, Qiang Zhang, Heqing Zou, Beier Zhu, Eng Siong Chng Institution: Nanyang Technological University; ZJU-Hangzhou Global Scientific and Technological Innovation Center; Zhejiang University

[WACV-2023] Audio-Visual Efficient Conformer for Robust Speech Recognition Authors: Maxime Burchi, Radu Timofte Institution: University of Würzburg

[2023] Prompt Tuning of Deep Neural Networks for Speaker-adaptive Visual Speech Recognition Authors: Minsu Kim, Hyung-Il Kim, Yong Man Ro Institution: Korea Advanced Institute of Science and Technology; Electronics and Telecommunications Research Institute

[2023] Multimodal Speech Recognition for Language-Guided Embodied Agents Authors: Allen Chang, Xiaoyuan Zhu, Aarav Monga, Seoho Ahn, Tejas Srinivasan, Jesse Thomason Institution: University of Southern California

[2023] MuAViC: A Multilingual Audio-Visual Corpus for Robust Speech Recognition and Robust Speech-to-Text Translation Authors: Mohamed Anwar, Bowen Shi, Vedanuj Goswami, Wei-Ning Hsu, Juan Pino, Changhan Wang Institution: Meta AI

[ICASSP-2023] The NPU-ASLP System for Audio-Visual Speech Recognition in MISP 2022 Challenge Authors: Pengcheng Guo, He Wang, Bingshen Mu, Ao Zhang, Peikun Chen Institution: Northwestern Polytechnical University

[CVPR-2023] Watch or Listen: Robust Audio-Visual Speech Recognition with Visual Corruption Modeling and Reliability Scoring Authors: Joanna Hong, Minsu Kim, Jeongsoo Choi, Yong Man Ro Institution: Korea Advanced Institute of Science and Technology

[ICASSP-2023] Auto-AVSR: Audio-Visual Speech Recognition with Automatic Labels Authors: Pingchuan Ma, Alexandros Haliassos, Adriana Fernandez-Lopez, Honglie Chen, Stavros Petridis, Maja Pantic Institution: Imperial College London; Meta AI

[CVPR-2023] AVFormer: Injecting Vision into Frozen Speech Models for Zero-Shot AV-ASR Authors: Paul Hongsuck Seo, Arsha Nagrani, Cordelia Schmid Institution: Google Research

[CVPR-2023] SynthVSR: Scaling Up Visual Speech Recognition With Synthetic Supervision Authors: Xubo Liu, Egor Lakomkin, Konstantinos Vougioukas, Pingchuan Ma, Honglie Chen, Ruiming Xie, Morrie Doulaty, Niko Moritz, Jáchym Kolář, Stavros Petridis, Maja Pantic, Christian Fuegen Institution: University of Surrey; Meta AI

[ICASSP-2023] Multi-Temporal Lip-Audio Memory for Visual Speech Recognition Authors: Jeong Hun Yeo, Minsu Kim, Yong Man Ro Institution: Korea Advanced Institute of Science and Technology

[ICASSP-2023] On the Role of Lip Articulation in Visual Speech Perception Authors: Zakaria Aldeneh, Masha Fedzechkina, Skyler Seto, Katherine Metcalf, Miguel Sarabia, Nicholas Apostoloff, Barry-John Theobald Institution: Apple Inc.

[ICASSP-2023] Practice of the Conformer Enhanced Audio-Visual Hubert on Mandarin and English Authors: Xiaoming Ren, Chao Li, Shenjian Wang, Biao Li Institution: Beijing OPPO Telecommunications Corp., Ltd.

[ICASSP-2023] Robust Audio-Visual ASR with Unified Cross-Modal Attention Authors: Jiahong Li, Chenda Li, Yifei Wu, Yanmin Qian Institution: Shanghai Jiao Tong University

[IJCAI-2023] Cross-Modal Global Interaction and Local Alignment for Audio-Visual Speech Recognition Authors: Yuchen Hu, Ruizhe Li, Chen Chen, Heqing Zou, Qiushi Zhu, Eng Siong Chng Institution: Nanyang Technological University; University of Aberdeen; University of Science and Technology of China

[Interspeech-2023] Prompting the Hidden Talent of Web-Scale Speech Models for Zero-Shot Task Generalization Authors: Puyuan Peng, Brian Yan, Shinji Watanabe, David Harwath Institution: The University of Texas at Austin; Carnegie Mellon University

[Interspeech-2023] Improving the Gap in Visual Speech Recognition Between Normal and Silent Speech Based on Metric Learning Authors: Sara Kashiwagi, Keitaro Tanaka, Qi Feng, Shigeo Morishima Institution: Waseda University; Waseda Research Institute for Science and Engineering

[ACL-2023] AV-TranSpeech: Audio-Visual Robust Speech-to-Speech Translation Authors: Rongjie Huang, Huadai Liu, Xize Cheng, Yi Ren, Linjun Li, Zhenhui Ye, Jinzheng He, Lichao Zhang, Jinglin Liu, Xiang Yin, Zhou Zhao Institution: Zhejiang University; ByteDance

[ACL-2023] Hearing Lips in Noise: Universal Viseme-Phoneme Mapping and Transfer for Robust Audio-Visual Speech Recognition Authors: Yuchen Hu, Ruizhe Li, Chen Chen, Chengwei Qin, Qiushi Zhu, Eng Siong Chng Institution: Nanyang Technological University; University of Aberdeen; University of Science and Technology of China

[ACL-2023] MIR-GAN: Refining Frame-Level Modality-Invariant Representations with Adversarial Network for Audio-Visual Speech Recognition Authors: Yuchen Hu, Chen Chen, Ruizhe Li, Heqing Zou, Eng Siong Chng Institution: Nanyang Technological University; University of Aberdeen

[IJCNN-2023] Exploiting Deep Learning for Sentence-Level Lipreading Authors: Isabella Wu, Xin Wang Institution: Choate Rosemary Hall; Stony Brook University

[IJCNN-2023] GLSI Texture Descriptor Based on Complex Networks for Music Genre Classification Authors: Andrés Eduardo Coca Salazar Institution: Federal University of Technology - Paraná

[ICME-2023] Improving Audio-Visual Speech Recognition by Lip-Subword Correlation Based Visual Pre-training and Cross-Modal Fusion Encoder Authors: Yusheng Dai, Hang Chen, Jun Du, Xiaofei Ding, Ning Ding, Feijun Jiang, Chin-Hui Lee Institution: University of Science and Technology of China; Alibaba Group; Georgia Institute of Technology

[ICME-2023] Multi-Scale Hybrid Fusion Network for Mandarin Audio-Visual Speech Recognition Authors: Jinxin Wang, Zhongwen Guo, Chao Yang, Xiaomei Li, Ziyuan Cui Institution: Ocean University of China; University of Technology Sydney

[AAAI-2024] Multichannel AV-wav2vec2: A Framework for Learning Multichannel Multi-Modal Speech Representation Authors: Qiushi Zhu, Jie Zhang, Yu Gu, Yuchen Hu, Lirong Dai Institution: NERC-SLIP, University of Science and Technology of China (USTC), Hefei, China; Tencent AI LAB; Nanyang Technological University, Singapore

[CVPR-2024] A Study of Dropout-Induced Modality Bias on Robustness to Missing Video Frames for Audio-Visual Speech Recognition Authors: Yusheng Dai, Hang Chen, Jun Du, Ruoyu Wang, Shihao Chen, Jiefeng Ma, Haotian Wang, Chin-Hui Lee Institution: University of Science and Technology of China, Hefei, China; Georgia Institute of Technology, Atlanta, America

[IJCNN-2024] Target Speech Extraction with Pre-trained AV-HuBERT and Mask-And-Recover Strategy Authors: Wenxuan Wu, Xueyuan Chen, Xixin Wu, Haizhou Li, Helen Meng Institution: The Chinese University of Hong Kong

[Interspeech-2024] LipGER: Visually-Conditioned Generative Error Correction for Robust Automatic Speech Recognition Authors: Sreyan Ghosh, Sonal Kumar, Ashish Seth, Purva Chiniya, Utkarsh Tyagi, Ramani Duraiswami, Dinesh Manocha Institution: University of Maryland, College Park, USA

[Interspeech-2024] Whisper-Flamingo: Integrating Visual Features into Whisper for Audio-Visual Speech Recognition and Translation Authors: Andrew Rouditchenko, Yuan Gong, Samuel Thomas, Leonid Karlinsky, Hilde Kuehne, Rogerio Feris, James Glass Institution: MIT, USA; IBM Research AI, USA; MIT-IBM Watson AI Lab, USA; University of Bonn, Germany

[MTA-2016] Audio-visual Speaker Diarization Using Fisher Linear Semi-discriminant Analysis Authors: Nikolaos Sarafianos, Theodoros Giannakopoulos, Sergios Petridis Institution: National Center for Scientific Research “Demokritos”

[ICASSP-2018] Audio-visual Person Recognition in Multimedia Data From the Iarpa Janus Program Authors: Gregory Sell, Kevin Duh, David Snyder, Dave Etter, Daniel Garcia-Romero Institution: The Johns Hopkins University

[ICASSP-2019] Noise-tolerant Audio-visual Online Person Verification Using an Attention-based Neural Network Fusion Authors: Suwon Shon, Tae-Hyun Oh, James Glass Institution: MIT Computer Science and Artificial Intelligence Laboratory, Cambridge

[Interspeech-2019] Who Said That?: Audio-visual Speaker Diarisation Of Real-World Meetings Authors: Joon Son Chung, Bong-Jin Lee, Icksang Han Institution: Naver Corporation

[ICASSP-2020] Self-Supervised Learning for Audio-visual Speaker Diarization Authors: Yifan Ding, Yong Xu, Shi-Xiong Zhang, Yahuan Cong, Liqiang Wang Institution: University of Central Florida; Tencent AI Lab; Beijing University of Posts and Telecommunications

[ICASSP-2021] A Multi-View Approach to Audio-visual Speaker Verification Authors: Leda Sari, Kritika Singh, Jiatong Zhou, Lorenzo Torresani, Nayan Singhal, Yatharth Saraf Institution: University of Illinois at Urbana-Champaign; Facebook AI Research

[IEEE/ACM TASLP-2021] Audio-visual Deep Neural Network for Robust Person Verification Authors: Yanmin Qian, Zhengyang Chen, Shuai Wang Institution: Shanghai Jiao Tong University

[ICDIP-2022] End-To-End Audiovisual Feature Fusion for Active Speaker Detection Authors: Fiseha B. Tesema, Zheyuan Lin, Shiqiang Zhu, Wei Song, Jason Gu, Hong Wu Institution: Interdisciplinary Innovation Research Institute, Zhejiang Lab; Dalhousie University; University of Electronic Science and Technology of China; Zhejiang University

[EUVIP-2022] Active Speaker Recognition using Cross Attention Audio-Video Fusion Authors: Bogdan Mocanu, Tapu Ruxandra Institution: University "Politehnica" of Bucharest; Télécom SudParis

[2022] Audio-Visual Activity Guided Cross-Modal Identity Association for Active Speaker Detection Authors: Rahul Sharma, Shrikanth Narayanan Institution: University of Southern California

[SLT-2023] Push-Pull: Characterizing the Adversarial Robustness for Audio-Visual Active Speaker Detection Authors: Xuanjun Chen, Haibin Wu, Helen Meng, Hung-yi Lee, Jyh-Shing Roger Jang Institution: National Taiwan University; The Chinese University of Hong Kong